Improved Detection of Fundus Lesions Using YOLOR-CSP Architecture and Slicing Aided Hyper Inference

Keywords:

diabetes mellitus, diabetic retinopathy, fundus image, lesions detection, deep learning

Abstract

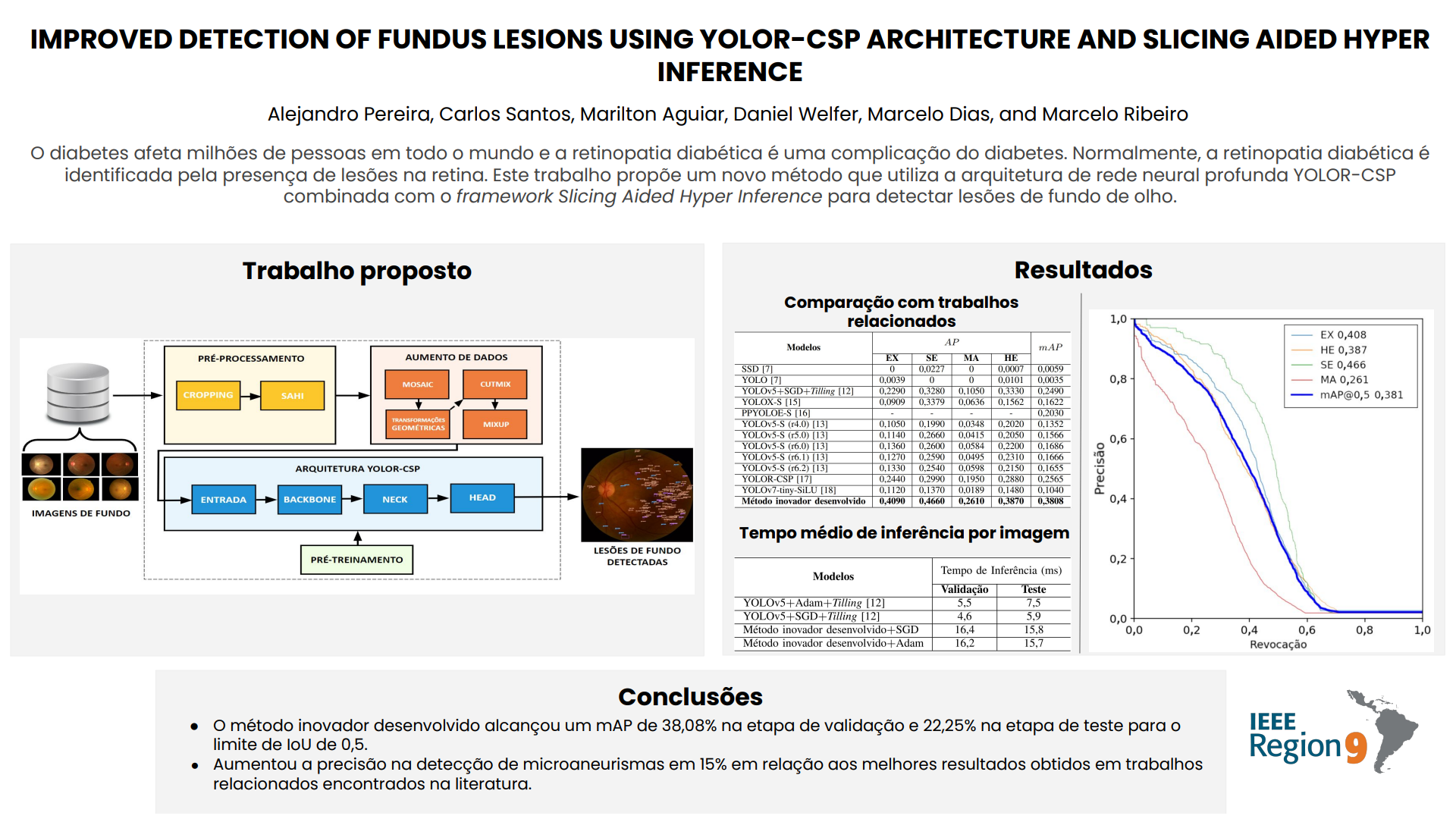

Diabetes affects millions of people worldwide, and diabetic retinopathy is one of its complications. Brazil ranks sixth in the world in the incidence of diabetes and has the highest number of cases in Latin America, with 15.7 million adults affected. Diabetic retinopathy is typically identified by lesions such as hard and soft exudates, microaneurysms, and vitreous hemorrhages. Early diagnosis of these lesions is essential to prevent the disease from progressing to severe stages and causing vision loss. Because diagnosis is based on image analysis, deep learning models can be used to detect these lesions in retinal images. This article proposes a new method that combines the YOLOR-CSP architecture with the Slicing Aided Hyper Inference framework to detect fundus lesions. The method was trained, tuned, and evaluated on the DDR dataset, obtaining a mAP of 38.08%. It achieved AP values of 40.90%, 46.60%, 26.10%, and 38.70% for hard exudates, soft exudates, microaneurysms, and vitreous hemorrhages, respectively, surpassing similar studies in the literature.
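Two ideas in the abstract can be made concrete with a small sketch. The first is the general idea behind sliced inference: the image is tiled into overlapping windows, a detector runs on each window, and the resulting boxes are shifted back to full-image coordinates. The second is the reported mAP, which is consistent with the unweighted mean of the four per-class AP values. The function names, the 512-pixel slice size, and the 0.2 overlap ratio below are illustrative assumptions; they are not the authors' settings, and this is not the API of the Slicing Aided Hyper Inference library.

```python
from statistics import mean


def slice_windows(width, height, slice_size=512, overlap=0.2):
    """Tile an image into overlapping (x0, y0, x1, y1) windows.

    slice_size and overlap are hypothetical defaults, not values from the paper.
    Windows at the right and bottom edges are shifted inward so they stay
    inside the image while keeping the full slice size where possible.
    """
    step = max(1, int(slice_size * (1 - overlap)))
    windows = []
    y = 0
    while True:
        y1 = min(y + slice_size, height)
        y0 = max(0, y1 - slice_size)
        x = 0
        while True:
            x1 = min(x + slice_size, width)
            x0 = max(0, x1 - slice_size)
            windows.append((x0, y0, x1, y1))
            if x1 >= width:
                break
            x += step
        if y1 >= height:
            break
        y += step
    return windows


def to_full_image(box, window):
    """Shift a box predicted inside a window back to full-image coordinates."""
    bx0, by0, bx1, by1 = box
    wx0, wy0, _, _ = window
    return (bx0 + wx0, by0 + wy0, bx1 + wx0, by1 + wy0)


# Per-class AP values (in percent) as reported in the abstract.
ap_per_class = {
    "hard exudates": 40.90,
    "soft exudates": 46.60,
    "microaneurysms": 26.10,
    "vitreous hemorrhages": 38.70,
}

# Unweighted mean over the four classes: about 38.075, in line with the
# reported mAP of 38.08%.
map_value = mean(ap_per_class.values())

windows = slice_windows(1024, 1024)
```

In a full pipeline, the boxes collected from all windows would still need to be merged (for example with non-maximum suppression) to remove duplicates from the overlapping regions; that step is omitted here.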

