Through the Youth Eyes: Training Depression Detection Algorithms with Eye Tracking Data

Keywords:

Machine Learning, Affective Computing, Emotion in human-computer interaction, Biometrics

Abstract

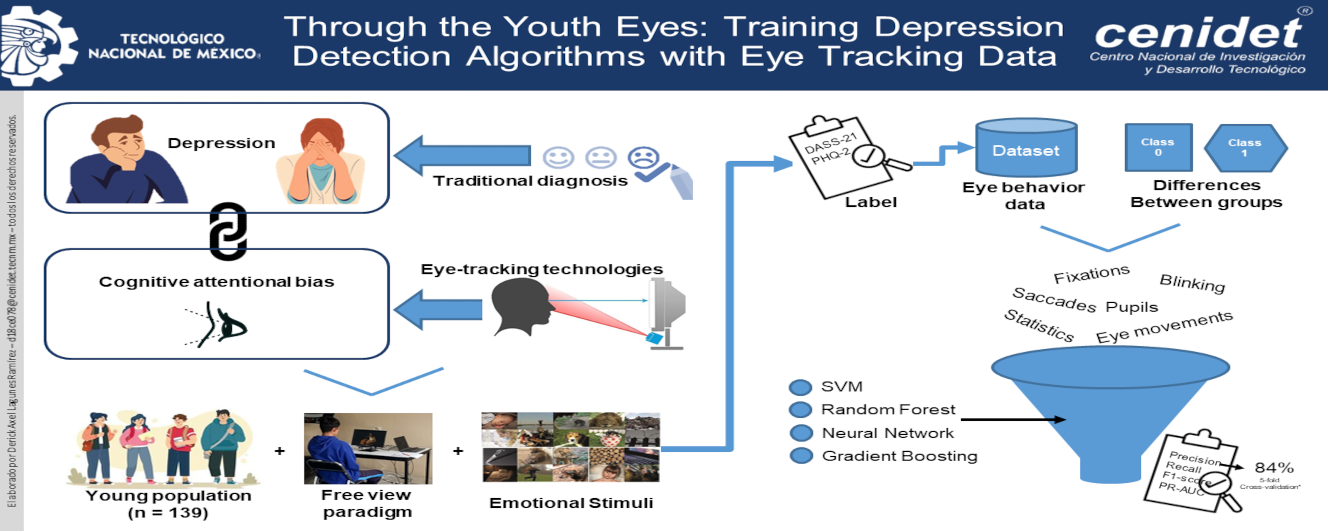

Depression is a prevalent mental health disorder, and early detection is crucial for effective intervention. Recent advancements in eye-tracking technology and machine learning offer new opportunities for non-invasive diagnosis. This study aims to assess the performance of different machine learning algorithms in predicting depression in a young sample using eye-tracking metrics. Eye-tracking data from 139 participants were recorded with an emotional induction paradigm in which each participant observed a set of positive and negative emotional stimuli. The data were analyzed for between-group differences, and the most significant features were selected to train the prediction models. The dataset was then split into training and testing sets using stratified sampling. Four algorithms were trained with hyperparameter optimization and 5-fold cross-validation: support vector machines (SVM), random forest (RF), a multi-layer perceptron (MLP) neural network, and gradient boosting (GB). The RF algorithm achieved the highest accuracy (84%), followed by SVM, GB, and the MLP neural network. In addition to accuracy, the models were evaluated with recall, F1-score, the precision-recall area under the curve (PR-AUC), and the Matthews correlation coefficient (MCC). The findings suggest that eye-tracking metrics combined with machine learning algorithms can effectively identify depressive symptoms in young people, indicating their potential as non-invasive diagnostic tools in clinical settings.
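The abstract does not state the split ratio, the hyperparameter grids, or the software used, so the following is only a minimal, dependency-free Python sketch of two steps it names: stratified 5-fold partitioning and the reported evaluation metrics. The function names (`stratified_kfold_indices`, `binary_metrics`, `average_precision`), the 0/1 label coding (1 = depressed), and the round-robin fold assignment are illustrative assumptions, not the authors' code. PR-AUC is estimated here as average precision, one common step-wise estimator; other interpolation schemes give slightly different values.

```python
import math
import random
from collections import defaultdict


def stratified_kfold_indices(labels, n_splits=5, seed=0):
    """Yield (train_idx, test_idx) pairs whose test folds keep roughly the
    class proportions of `labels`. A single fold can also serve as a
    stratified hold-out set (about 20% of the data when n_splits=5)."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    folds = [[] for _ in range(n_splits)]
    for idx in by_class.values():
        rng.shuffle(idx)
        # Deal each class's shuffled indices round-robin across the folds.
        for k, i in enumerate(idx):
            folds[k % n_splits].append(i)
    for k in range(n_splits):
        test = sorted(folds[k])
        train = sorted(i for j, fold in enumerate(folds) if j != k for i in fold)
        yield train, test


def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, F1 and MCC for a binary task (1 = depressed,
    an assumed coding)."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    # MCC = (TP*TN - FP*FN) / sqrt((TP+FP)(TP+FN)(TN+FP)(TN+FN)); 0 if undefined.
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {"accuracy": (tp + tn) / len(y_true), "precision": precision,
            "recall": recall, "f1": f1, "mcc": mcc}


def average_precision(y_true, scores):
    """PR-AUC estimated as average precision: the mean of the precision values
    reached at each positive when samples are ranked by descending score.
    Tied scores are not grouped here (scikit-learn groups them)."""
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    n_pos = sum(y_true)
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank  # precision at this cut-off
    return total / n_pos if n_pos else 0.0


if __name__ == "__main__":
    # Toy predictions: TP=2, FN=1, TN=2, FP=1, so MCC = (4 - 1) / sqrt(81) = 1/3.
    print(binary_metrics([1, 1, 1, 0, 0, 0], [1, 1, 0, 0, 0, 1]))
```

In practice, scikit-learn's `StratifiedKFold`, `GridSearchCV`, `matthews_corrcoef`, and `average_precision_score` cover the same steps; the hand-rolled version only makes the definitions explicit. MCC is worth reporting alongside accuracy because it uses all four cells of the confusion matrix and stays informative when the two classes are imbalanced.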
