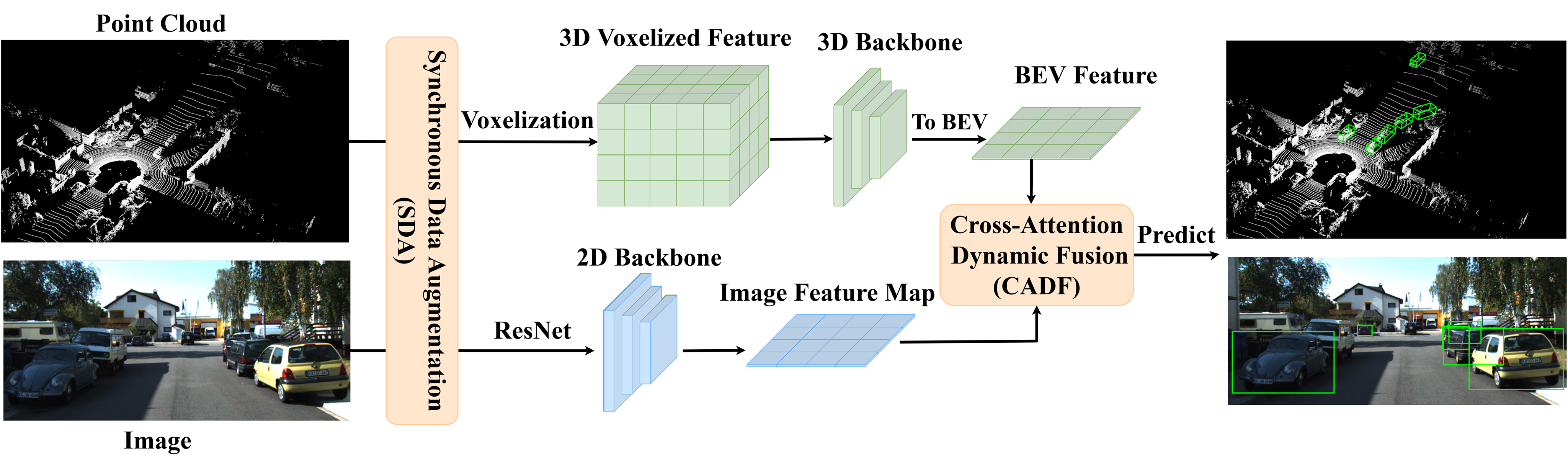

DyFusion: Cross-Attention 3D Object Detection with Dynamic Fusion

Keywords:

Cross-Attention Dynamic Fusion, Synchronous Data Augmentation, 3D object detectionAbstract

In the realm of autonomous driving, LiDAR and camera sensors play an indispensable role, furnishing pivotalobservational data for the critical task of precise 3D object detection. Existing fusion algorithms effectively utilize the complementary data from both sensors. However, these methods typically concatenate the raw point cloud data and pixel-level image features, unfortunately, a process that introduces errors and results in the loss of critical information embedded in each modality. To mitigate the problem of lost feature information, this paper proposes a Cross-Attention Dynamic Fusion (CADF) strategy that dynamically fuses the two heterogeneous data sources. In addition, we acknowledge the issue of insufficient data augmentation for these two diverse modalities. To combat this, we propose a Synchronous Data Augmentation (SDA) strategy designed to enhance training efficiency. We have tested our method using the KITTI and nuScenes datasets, and the results have been promising. Remarkably, our top-performing model attained an 82.52% mAP on the KITTI test benchmark, outperforming other state-of-the-art methods.

Downloads

References

L. Wang, X. Zhang, Z. Song, J. Bi, G. Zhang, H. Wei, L. Tang, L. Yang, J. Li, C. Jia et al, “Multi-modal 3d object detection in autonomous driving: A survey and taxonomy,” IEEE Transactions on Intelligent V ehicles, 2023.

A. Geiger, P . Lenz, and R. Urtasun, “Are we ready for autonomous driving? the kitti vision benchmark suite,” in 2012 IEEE conference on computer vision and pattern recognition. IEEE, 2012, pp. 3354–3361.

H. Caesar, V . Bankiti, A. H. Lang, S. V ora, V . E. Liong, Q. Xu, A. Krishnan, Y . Pan, G. Baldan, and O. Beijbom, “nuscenes: A multimodal dataset for autonomous driving,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 11 621–11 631.

C. R. Qi, H. Su, K. Mo, and L. J. Guibas, “Pointnet: Deep learning on point sets for 3d classification and segmentation,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2017, pp.652–660.

Y . Zhou and O. Tuzel, “V oxelnet: End-to-end learning for point cloud based 3d object detection,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 4490–4499.

C. R. Qi, W. Liu, C. Wu, H. Su, and L. J. Guibas, “Frustum pointnets for 3d object detection from rgb-d data,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 918– 927.

X. Chen, H. Ma, J. Wan, B. Li, and T. Xia, “Multi-view 3d object detection network for autonomous driving,” in Proceedings of the IEEE conference on Computer Vision and Pattern Recognition, 2017, pp.1907–1915.

J. Ku, M. Mozifian, J. Lee, A. Harakeh, and S. L. Waslander, “Joint 3d proposal generation and object detection from view aggregation,” in 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2018, pp. 1–8.

Y . Yan, Y . Mao, and B. Li, “Second: Sparsely embedded convolutional detection,” Sensors, vol. 18, no. 10, p. 3337, 2018.

A. Simonelli, S. R. Bulo, L. Porzi, M. López-Antequera, and P . Kontschieder, “Disentangling monocular 3d object detection,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 1991–1999.

G. Brazil and X. Liu, “M3d-rpn: Monocular 3d region proposal network for object detection,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, 2019, pp. 9287–9296.

G. Wang, B. Tian, Y . Zhang, L. Chen, D. Cao, and J. Wu, “Multiview adaptive fusion network for 3d object detection,” arXiv preprint arXiv:2011.00652, 2020.

J. H. Y oo, Y . Kim, J. Kim, and J. W. Choi, “3d-cvf: Generating joint camera and lidar features using cross-view spatial feature fusion for 3d object detection,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXVII 16. Springer, 2020, pp. 720–736.

J. Deng, S. Shi, P . Li, W. Zhou, Y . Zhang, and H. Li, “V oxel rcnn: Towards high performance voxel-based 3d object detection,” in Proceedings of the AAAI Conference on Artificial Intelligence, vol. 35, no. 2, 2021, pp. 1201–1209.

C. R. Qi, W. Liu, C. Wu, H. Su, and L. J. Guibas, “Frustum pointnets for 3d object detection from rgb-d data,” in Proceedings of the IEEE conference on computer vision and pattern recognition, 2018, pp. 918– 927.

W. Shi and R. Rajkumar, “Point-gnn: Graph neural network for 3d object detection in a point cloud,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 1711–1719.

S. Shi, X. Wang, and H. Li, “Pointrcnn: 3d object proposal generation and detection from point cloud,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2019, pp. 770– 779.

Z. Wang and K. Jia, “Frustum convnet: Sliding frustums to aggregate local point-wise features for amodal 3d object detection,” in 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2019, pp. 1742–1749.

Z. Zhang, Y . Shen, H. Li, X. Zhao, M. Yang, W. Tan, S. Pu, and H. Mao, “Maff-net: Filter false positive for 3d vehicle detection with multi-modal adaptive feature fusion,” in 2022 IEEE 25th International Conference on Intelligent Transportation Systems (ITSC). IEEE, 2022, pp. 369–376.

T. Huang, Z. Liu, X. Chen, and X. Bai, “Epnet: Enhancing point features with image semantics for 3d object detection,” in European Conference on Computer Vision. Springer, 2020, pp. 35–52.

S. V ora, A. H. Lang, B. Helou, and O. Beijbom, “Pointpainting: Sequential fusion for 3d object detection,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2020, pp. 4604– 4612.

Q. Chen, L. Sun, Z. Wang, K. Jia, and A. Y uille, “Object as hotspots: An anchor-free 3d object detection approach via firing of hotspots,” in Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part XXI 16. Springer, 2020, pp. 68–84.

T. Yin, X. Zhou, and P . Krahenbuhl, “Center-based 3d object detection and tracking,” in Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 2021, pp. 11 784–11 793.