Mapping the Landscape of SLAM Research: A Review

Keywords:

Simultaneous Localization and Mapping, SLAM, Survey, Visual SLAM, Artificial Intelligence

Abstract

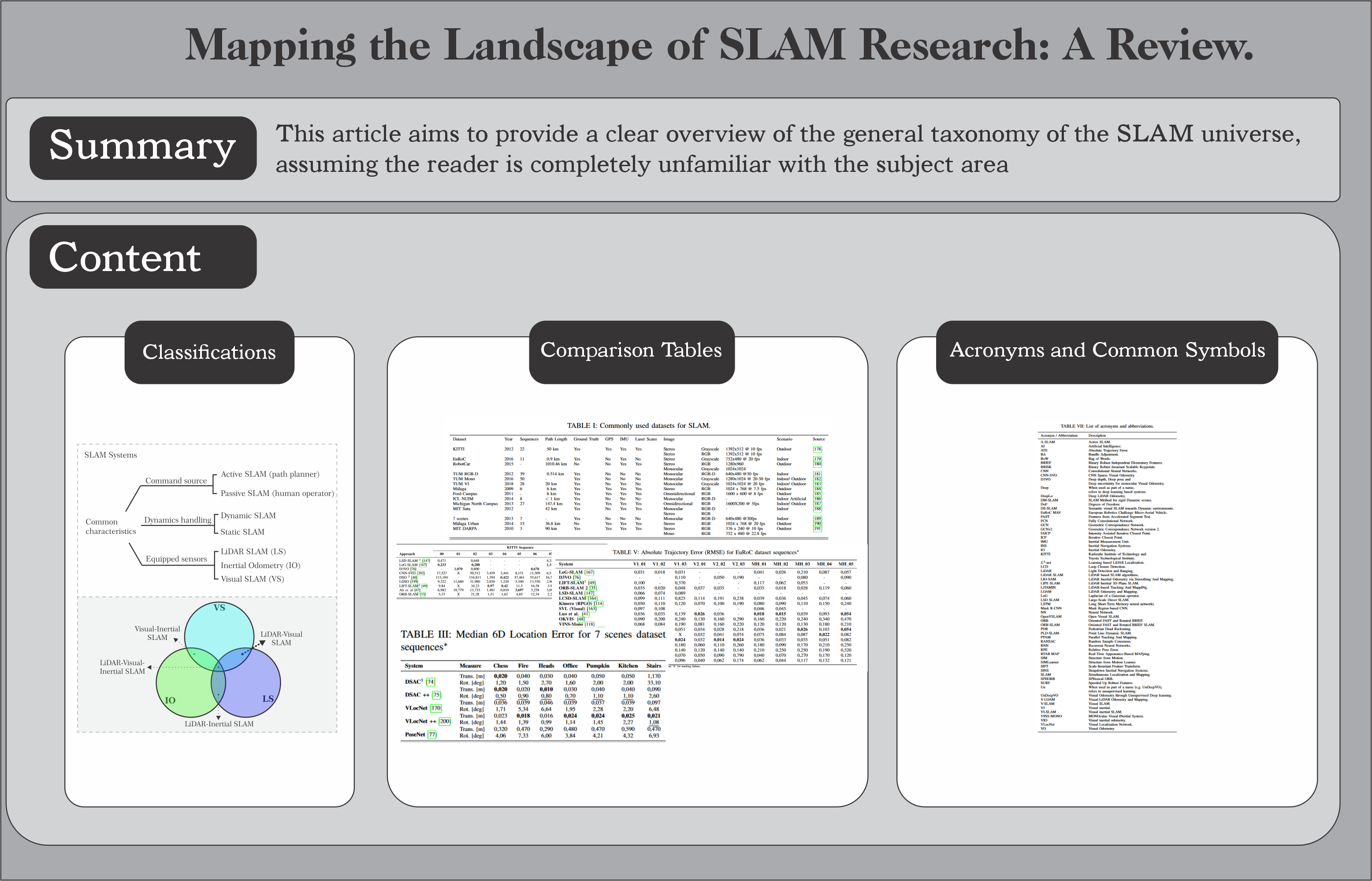

Over three decades of research in simultaneous localization and mapping (SLAM) have produced a very large number of publications, which can overwhelm those who wish to enter this area. This extensive body of work has resulted in an abundant and, at times, confusing flow of information that often lacks a straightforward explanation of the underlying principles. This article addresses the issue by providing a clear overview of the general taxonomy of the SLAM universe, assuming the reader is completely unfamiliar with the subject. Because cameras remain the primary sensor choice for SLAM, and given the vast number of articles on this topic, special emphasis is placed on visual SLAM. We also examine the influence of artificial intelligence on the development of new algorithms. The article includes comparative tables that allow readers to assess system performance on benchmark datasets. Finally, it offers insights into current trends and prospective directions in the field.

References

I. Lluvia, E. Lazkano, and A. Ansuategi, “Active mapping and robot exploration: A survey,” Sensors, vol. 21, no. 7, p. 2445, 2021.

R. C. Smith and P. Cheeseman, “On the representation and estimation of spatial uncertainty,” The international journal of Robotics Research, vol. 5, no. 4, pp. 56–68, 1986.

H. F. Durrant-Whyte, “Uncertain geometry in robotics,” IEEE Journal on Robotics and Automation, vol. 4, no. 1, pp. 23–31, 1988. ] H. Durrant-Whyte and T. Bailey, “Simultaneous localization and mapping: part i,” IEEE robotics & automation magazine, vol. 13, no. 2,pp. 99–110, 2006.

T. Bailey and H. Durrant-Whyte, “Simultaneous localization and mapping (slam): Part ii,” IEEE robotics & automation magazine, vol. 13,no. 3, pp. 108–117, 2006.

K. Yousif, A. Bab-Hadiashar, and R. Hoseinnezhad, “An overview to visual odometry and visual slam: Applications to mobile robotics,”Intelligent Industrial Systems, vol. 1, no. 4, pp. 289–311, 2015.

B. Kitchenham and S. Charters, “Guidelines for performing systematic literature reviews in software engineering,” Technical report, Ver. 2.3 EBSE Technical Report. EBSE, 2007.

S. Huang and G. Dissanayake, “A critique of current developments in simultaneous localization and mapping,” International Journal of Advanced Robotic Systems, vol. 13, no. 5, p.1729881416669482, 2016.

D. Scaramuzza and F. Fraundorfer, “Visual odometry [tutorial],” IEEE robotics & automation magazine, vol. 18, no. 4, pp. 80–92, 2011.

F. Fraundorfer and D. Scaramuzza, “Visual odometry: Part ii: Matching, robustness, optimization, and applications,” IEEE Robotics & Automation Magazine, vol. 19, no. 2, pp. 78–90, 2012.

S. Saeedi, M. Trentini, M. Seto, and H. Li, “Multiple-robot simultaneous localization and mapping: A review,” Journal of Field Robotics, vol. 33, no. 1, pp. 3–46, 2016.

B. Huang, J. Zhao, and J. Liu, “A survey of simultaneous localization and mapping with an envision in 6g wireless networks,” arXiv preprint arXiv:1909.05214, 2019.

G. Bresson, Z. Alsayed, L. Yu, and S. Glaser, “Simultaneous localization and mapping: A survey of current trends in autonomous driving,” IEEE Transactions on Intelligent Vehicles, vol. 2, no. 3, pp. 194–220, 2017.

M. R. U. Saputra, A. Markham, and N. Trigoni, “Visual slam and structure from motion in dynamic environments: A survey,” ACM Computing Surveys (CSUR), vol. 51, no. 2, pp. 1–36, 2018.

C. Chen, B. Wang, C. X. Lu, N. Trigoni, and A. Markham, “A survey on deep learning for localization and mapping: Towards the age of spatial machine intelligence,” arXiv preprint arXiv:2006.12567, 2020.

B. Yamauchi, “A frontier-based approach for autonomous exploration,” Proceedings 1997 IEEE International Symposium on Computational Intelligence in Robotics and Automation CIRA’97.’Towards New Computational Principles for Robotics and Automation’, pp. 146–151,1997.

B. Yamauchi, A. Schultz, and W. Adams, “Mobile robot explorationand map-building with continuous localization,” Proceedings. 1998IEEE International Conference on Robotics and Automation (Cat. No.98CH36146), vol. 4, pp. 3715–3720, 1998.

C. Zhu, R. Ding, M. Lin, and Y. Wu, “A 3d frontier-based explorationtool for mavs,” 2015 IEEE 27th International Conference on Tools withArtificial Intelligence (ICTAI), pp. 348–352, 2015.

A. Dai, S. Papatheodorou, N. Funk, D. Tzoumanikas, and S. Leuteneg-ger, “Fast frontier-based information-driven autonomous explorationwith an mav,” 2020 IEEE International Conference on Robotics andAutomation (ICRA), pp. 9570–9576, 2020.

H. H. González-Banos and J.-C. Latombe, “Navigation strategies forexploring indoor environments,” The International Journal of RoboticsResearch, vol. 21, no. 10-11, pp. 829–848, 2002.

W. Burgard, M. Moors, D. Fox, R. Simmons, and S. Thrun, “Collab-orative multi-robot exploration,” Proceedings 2000 ICRA. MillenniumConference. IEEE International Conference on Robotics and Automation. Symposia Proceedings (Cat. No. 00CH37065), vol. 1, pp. 476–481, 2000.

R. Zlot, A. Stentz, M. B. Dias, and S. Thayer, “Multi-robot explorationcontrolled by a market economy,” Proceedings 2002 IEEE internationalconference on robotics and automation (Cat. No. 02CH37292), vol. 3,pp. 3016–3023, 2002.

A. A. Makarenko, S. B. Williams, F. Bourgault, and H. F. Durrant-Whyte, “An experiment in integrated exploration,” IEEE/RSJ interna-tional conference on intelligent robots and systems, vol. 1, pp. 534–539,2002.

M. Juliá, A. Gil, and O. Reinoso, “A comparison of path planningstrategies for autonomous exploration and mapping of unknown envi-ronments,” Autonomous Robots, vol. 33, no. 4, pp. 427–444, 2012.

R. Sim and N. Roy, “Global a-optimal robot exploration in slam,”Proceedings of the 2005 IEEE international conference on roboticsand automation, pp. 661–666, 2005.

J. Vallvé and J. Andrade-Cetto, “Active pose slam with rrt,” 2015IEEE International Conference on Robotics and Automation (ICRA),pp. 2167–2173, 2015.

C. Leung, S. Huang, and G. Dissanayake, “Active slam using modelpredictive control and attractor based exploration,” 2006 IEEE/RSJInternational Conference on Intelligent Robots and Systems, pp. 5026–5031, 2006.

I. Maurovi ́c, M. Seder, K. Lenac, and I. Petrovi ́c, “Path planning foractive slam based on the d* algorithm with negative edge weights,”IEEE Transactions on Systems, Man, and Cybernetics: Systems, vol. 48,no. 8, pp. 1321–1331, 2017.

E. Bonetto, P. Goldschmid, M. Pabst, M. J. Black, and A. Ahmad,“irotate: Active visual slam for omnidirectional robots,” Robotics andAutonomous Systems, p. 104102, 2022.

H. Carrillo, P. Dames, V. Kumar, and J. A. Castellanos, “Autonomousrobotic exploration using a utility function based on rényi’s generaltheory of entropy,” Autonomous Robots, vol. 42, no. 2, pp. 235–256,2018.

Y. Chen, S. Huang, and R. Fitch, “Active slam for mobile robots witharea coverage and obstacle avoidance,” IEEE/ASME Transactions onMechatronics, vol. 25, no. 3, pp. 1182–1192, 2020.

C. Cadena, L. Carlone, H. Carrillo, Y. Latif, D. Scaramuzza, J. Neira,I. Reid, and J. J. Leonard, “Past, present, and future of simultaneouslocalization and mapping: Toward the robust-perception age,” IEEETransactions on robotics, vol. 32, no. 6, pp. 1309–1332, 2016.

R. Mur-Artal, J. M. M. Montiel, and J. D. Tardos, “Orb-slam: a versatileand accurate monocular slam system,” IEEE transactions on robotics,vol. 31, no. 5, pp. 1147–1163, 2015.

R. Mur-Artal and J. D. Tardós, “Visual-inertial monocular slam withmap reuse,” IEEE Robotics and Automation Letters, vol. 2, no. 2,pp. 796–803, 2017.

R. Mur-Artal and J. D. Tardós, “Orb-slam2: An open-source slamsystem for monocular, stereo, and rgb-d cameras,” IEEE Transactionson Robotics, vol. 33, no. 5, pp. 1255–1262, 2017.

F. Nobis, O. Papanikolaou, J. Betz, and M. Lienkamp, “Persistent mapsaving for visual localization for autonomous vehicles: An orb-slam2 extension,” 2020 Fifteenth International Conference on EcologicalVehicles and Renewable Energies (EVER), pp. 1–9, 2020.

D. Schlegel, M. Colosi, and G. Grisetti, “Proslam: graph slam froma programmer’s perspective,” 2018 IEEE International Conference onRobotics and Automation (ICRA), pp. 3833–3840, 2018.

R. Elvira, J. D. Tardós, and J. M. Montiel, “Orbslam-atlas: a robust andaccurate multi-map system,” 2019 IEEE/RSJ International Conferenceon Intelligent Robots and Systems (IROS), pp. 6253–6259, 2019.

C. Campos, R. Elvira, J. J. G. Rodríguez, J. M. Montiel, and J. D.Tardós, “Orb-slam3: An accurate open-source library for visual, visual–inertial, and multimap slam,” IEEE Transactions on Robotics, 2021.

J. Engel, V. Koltun, and D. Cremers, “Direct sparse odometry,” IEEEtransactions on pattern analysis and machine intelligence, vol. 40,no. 3, pp. 611–625, 2017.

H. Luo, C. Pape, and E. Reithmeier, “Hybrid monocular slam usingdouble window optimization,” IEEE Robotics and Automation Letters,vol. 6, no. 3, pp. 4899–4906, 2021.

G. Grisetti, R. Kümmerle, C. Stachniss, and W. Burgard, “A tutorial ongraph-based slam,” IEEE Intelligent Transportation Systems Magazine,vol. 2, no. 4, pp. 31–43, 2010.

S. Kohlbrecher, O. Von Stryk, J. Meyer, and U. Klingauf, “A flexibleand scalable slam system with full 3d motion estimation,” 2011IEEE international symposium on safety, security, and rescue robotics,pp. 155–160, 2011.

H. Carrillo, P. Dames, V. Kumar, and J. A. Castellanos, “Autonomousrobotic exploration using occupancy grid maps and graph slam basedon shannon and rényi entropy,” 2015 IEEE international conference onrobotics and automation (ICRA), pp. 487–494, 2015.

M. Montemerlo, S. Thrun, D. Koller, B. Wegbreit, et al., “Fastslam:A factored solution to the simultaneous localization and mappingproblem,” Aaai/iaai, vol. 593598, 2002.

J. Zhang, W. Sui, X. Wang, W. Meng, H. Zhu, and Q. Zhang, “Deeponline correction for monocular visual odometry,” 2021 IEEE Inter-national Conference on Robotics and Automation (ICRA), pp. 14396–14402, 2021.

M. Bloesch, J. Czarnowski, R. Clark, S. Leutenegger, and A. J. Davi-son, “Codeslam—learning a compact, optimisable representation fordense visual slam,” Proceedings of the IEEE conference on computervision and pattern recognition, pp. 2560–2568, 2018.

S. Leutenegger, S. Lynen, M. Bosse, R. Siegwart, and P. Furgale,“Keyframe-based visual–inertial odometry using nonlinear optimiza-tion,” The International Journal of Robotics Research, vol. 34, no. 3,pp. 314–334, 2015.

H. M. S. Bruno and E. L. Colombini, “Lift-slam: a deep-learningfeature-based monocular visual slam method,” Neurocomputing,vol. 455, pp. 97–110, 2021.

B. Triggs, P. F. McLauchlan, R. I. Hartley, and A. W. Fitzgibbon,“Bundle adjustment—a modern synthesis,” International workshop onvision algorithms, pp. 298–372, 1999.

S. Lowry, N. Sünderhauf, P. Newman, J. J. Leonard, D. Cox, P. Corke,and M. J. Milford, “Visual place recognition: A survey,” IEEE Trans-actions on Robotics, vol. 32, no. 1, pp. 1–19, 2015.

Y. Latif, G. Huang, J. J. Leonard, and J. Neira, “An online sparsity-cognizant loop-closure algorithm for visual navigation.,” Robotics:Science and Systems, 2014.

G. Csurka, C. Dance, L. Fan, J. Willamowski, and C. Bray, “Visualcategorization with bags of keypoints,” Workshop on statistical learningin computer vision, ECCV, vol. 1, no. 1-22, pp. 1–2, 2004.

D. Gálvez-López and J. D. Tardos, “Bags of binary words for fastplace recognition in image sequences,” IEEE Transactions on Robotics,vol. 28, no. 5, pp. 1188–1197, 2012.

M. Labbe and F. Michaud, “Appearance-based loop closure detectionfor online large-scale and long-term operation,” IEEE Transactions onRobotics, vol. 29, no. 3, pp. 734–745, 2013.

D. Filliat, “A visual bag of words method for interactive qualitativelocalization and mapping,” Proceedings 2007 IEEE International Con-ference on Robotics and Automation, pp. 3921–3926, 2007.

D. Schlegel and G. Grisetti, “Visual localization and loop closingusing decision trees and binary features,” 2016 IEEE/RSJ InternationalConference on Intelligent Robots and Systems (IROS), pp. 4616–4623,2016.

J. Chen, J. Li, Y. Xu, G. Shen, and Y. Gao, “A compact loopclosure detection based on spatial partitioning,” 2017 2nd InternationalConference on Image, Vision and Computing (ICIVC), pp. 371–375,2017.

Z. Yang, Y. Pan, L. Deng, Y. Xie, and R. Huan, “Plsav: Parallel loopsearching and verifying for loop closure detection,” IET IntelligentTransport Systems, vol. 15, no. 5, pp. 683–698, 2021.

N. Kejriwal, S. Kumar, and T. Shibata, “High performance loop closuredetection using bag of word pairs,” Robotics and Autonomous Systems,vol. 77, pp. 55–65, 2016.

H. Zhang, F. Han, and H. Wang, “Robust multimodal sequence-basedloop closure detection via structured sparsity.,” Robotics: Science andsystems, 2016.

M. Yokozuka, K. Koide, S. Oishi, and A. Banno, “Litamin: Lidar-basedtracking and mapping by stabilized icp for geometry approximationwith normal distributions,” 2020 IEEE/RSJ International Conferenceon Intelligent Robots and Systems (IROS), pp. 5143–5150, 2020.

M. Yokozuka, K. Koide, S. Oishi, and A. Banno, “Litamin2: Ultralight lidar-based slam using geometric approximation applied with kl-divergence,” 2021 IEEE International Conference on Robotics andAutomation (ICRA), pp. 11619–11625, 2021.

J. Behley and C. Stachniss, “Efficient surfel-based slam using 3d laserrange data in urban environments.,” Robotics: Science and Systems,vol. 2018, p. 59, 2018.

J. Zhang and S. Singh, “Loam: Lidar odometry and mapping in real-time.,” Robotics: Science and Systems, vol. 2, no. 9, pp. 1–9, 2014.

T. Shan and B. Englot, “Lego-loam: Lightweight and ground-optimizedlidar odometry and mapping on variable terrain,” 2018 IEEE/RSJInternational Conference on Intelligent Robots and Systems (IROS),pp. 4758–4765, 2018.

W. Ali, P. Liu, R. Ying, and Z. Gong, “6-dof feature based lidar slamusing orb features from rasterized images of 3d lidar point cloud,”arXiv preprint arXiv:2103.10678, 2021.

M. Valente, C. Joly, and A. de La Fortelle, “An lstm network for real-time odometry estimation,” 2019 IEEE Intelligent Vehicles Symposium(IV), pp. 1434–1440, 2019.

L. Sun, D. Adolfsson, M. Magnusson, H. Andreasson, I. Posner, andT. Duckett, “Localising faster: Efficient and precise lidar-based robotlocalisation in large-scale environments,” 2020 IEEE InternationalConference on Robotics and Automation (ICRA), pp. 4386–4392, 2020.

M. A. Fischler and R. C. Bolles, “Random sample consensus: aparadigm for model fitting with applications to image analysis andautomated cartography,” Communications of the ACM, vol. 24, no. 6,pp. 381–395, 1981.

J.-P. Tardif, Y. Pavlidis, and K. Daniilidis, “Monocular visual odom-etry in urban environments using an omnidirectional camera,” 2008IEEE/RSJ International Conference on Intelligent Robots and Systems,pp. 2531–2538, 2008.

B. Bescos, J. M. Fácil, J. Civera, and J. Neira, “Dynaslam: Tracking,mapping, and inpainting in dynamic scenes,” IEEE Robotics andAutomation Letters, vol. 3, no. 4, pp. 4076–4083, 2018.

G. Klein and D. Murray, “Parallel tracking and mapping for small arworkspaces,” 2007 6th IEEE and ACM international symposium onmixed and augmented reality, pp. 225–234, 2007.

R. Munoz-Salinas and R. Medina-Carnicer, “Ucoslam: Simultaneouslocalization and mapping by fusion of keypoints and squared planarmarkers,” Pattern Recognition, vol. 101, p. 107193, 2020.

R. Munoz-Salinas, M. J. Marin-Jimenez, and R. Medina-Carnicer,“Spm-slam: Simultaneous localization and mapping with squared pla-nar markers,” Pattern Recognition, vol. 86, pp. 156–171, 2019.

B. Pfrommer and K. Daniilidis, “Tagslam: Robust slam with fiducialmarkers,” arXiv preprint arXiv:1910.00679, 2019.

J. Wang and E. Olson, “Apriltag 2: Efficient and robust fiducialdetection,” 2016 IEEE/RSJ International Conference on IntelligentRobots and Systems (IROS), pp. 4193–4198, 2016.

S. Sumikura, M. Shibuya, and K. Sakurada, “Openvslam: A versatilevisual slam framework,” Proceedings of the 27th ACM InternationalConference on Multimedia, pp. 2292–2295, 2019.

P. G. Savage, “Strapdown inertial navigation integration algorithmdesign part 1: Attitude algorithms,” Journal of guidance, control, anddynamics, vol. 21, no. 1, pp. 19–28, 1998.

S. Beauregard and H. Haas, “Pedestrian dead reckoning: A basis forpersonal positioning,” Proceedings of the 3rd Workshop on Positioning,Navigation and Communication, pp. 27–35, 2006.

Y. Wu, X. Hu, D. Hu, T. Li, and J. Lian, “Strapdown inertial navigationsystem algorithms based on dual quaternions,” IEEE transactions onaerospace and electronic systems, vol. 41, no. 1, pp. 110–132, 2005.

W. Sun, D. Wang, L. Xu, and L. Xu, “Mems-based rotary strapdowninertial navigation system,” Measurement, vol. 46, no. 8, pp. 2585–2596, 2013.

S. Cortés, A. Solin, and J. Kannala, “Deep learning based speed esti-mation for constraining strapdown inertial navigation on smartphones,”2018 IEEE 28th International Workshop on Machine Learning forSignal Processing (MLSP), pp. 1–6, 2018.

M. Brossard, A. Barrau, and S. Bonnabel, “Rins-w: Robust inertial nav-igation system on wheels,” 2019 IEEE/RSJ International Conferenceon Intelligent Robots and Systems (IROS), pp. 2068–2075, 2019.

H. Yan, Q. Shan, and Y. Furukawa, “Ridi: Robust imu double inte-gration,” Proceedings of the European Conference on Computer Vision(ECCV), pp. 621–636, 2018.

M. Ren, K. Pan, Y. Liu, H. Guo, X. Zhang, and P. Wang, “A novelpedestrian navigation algorithm for a foot-mounted inertial-sensor-based system,” Sensors, vol. 16, no. 1, p. 139, 2016.

B. Wagstaff and J. Kelly, “Lstm-based zero-velocity detection forrobust inertial navigation,” 2018 International Conference on IndoorPositioning and Indoor Navigation (IPIN), pp. 1–8, 2018.

C. Chen, Y. Miao, C. X. Lu, L. Xie, P. Blunsom, A. Markham, andN. Trigoni, “Motiontransformer: Transferring neural inertial trackingbetween domains,” Proceedings of the AAAI Conference on ArtificialIntelligence, vol. 33, no. 01, pp. 8009–8016, 2019.

C. Chen, X. Lu, A. Markham, and N. Trigoni, “Ionet: Learning tocure the curse of drift in inertial odometry,” Proceedings of the AAAIConference on Artificial Intelligence, vol. 32, no. 1, 2018.27 IEEE LATIN AMERICA TRANSACTIONS , Vol. 7, No. 7, Oct 2020

M. A. Esfahani, H. Wang, K. Wu, and S. Yuan, “Aboldeepio: Anovel deep inertial odometry network for autonomous vehicles,” IEEETransactions on Intelligent Transportation Systems, vol. 21, no. 5,pp. 1941–1950, 2019.

J. Ku, A. Harakeh, and S. L. Waslander, “In defense of classical imageprocessing: Fast depth completion on the cpu,” 2018 15th Conferenceon Computer and Robot Vision (CRV), pp. 16–22, 2018.

F. Ma and S. Karaman, “Sparse-to-dense: Depth prediction from sparsedepth samples and a single image,” 2018 IEEE international conferenceon robotics and automation (ICRA), pp. 4796–4803, 2018.

S. S. Shivakumar, T. Nguyen, I. D. Miller, S. W. Chen, V. Kumar,and C. J. Taylor, “Dfusenet: Deep fusion of rgb and sparse depthinformation for image guided dense depth completion,” 2019 IEEEIntelligent Transportation Systems Conference (ITSC), pp. 13–20, 2019.

J. Zhang and S. Singh, “Visual-lidar odometry and mapping: Low-drift,robust, and fast,” 2015 IEEE International Conference on Robotics andAutomation (ICRA), pp. 2174–2181, 2015.

M. Labbé and F. Michaud, “Rtab-map as an open-source lidar andvisual simultaneous localization and mapping library for large-scaleand long-term online operation,” Journal of Field Robotics, vol. 36,no. 2, pp. 416–446, 2019.

S. Li and D. Lee, “Rgb-d slam in dynamic environments using staticpoint weighting,” IEEE Robotics and Automation Letters, vol. 2, no. 4,pp. 2263–2270, 2017.

Y. Sun, M. Liu, and M. Q.-H. Meng, “Improving rgb-d slam in dynamicenvironments: A motion removal approach,” Robotics and AutonomousSystems, vol. 89, pp. 110–122, 2017.

Y. Wang and S. Huang, “Motion segmentation based robust rgb-dslam,” Proceeding of the 11th World Congress on Intelligent Controland Automation, pp. 3122–3127, 2014.

Y. Xu, Y. Ou, and T. Xu, “Slam of robot based on the fusion of visionand lidar,” 2018 IEEE International Conference on Cyborg and BionicSystems (CBS), pp. 121–126, 2018.

D. Xu, D. Anguelov, and A. Jain, “Pointfusion: Deep sensor fusion for3d bounding box estimation,” Proceedings of the IEEE conference oncomputer vision and pattern recognition, pp. 244–253, 2018.

K. Shin, Y. P. Kwon, and M. Tomizuka, “Roarnet: A robust 3dobject detection based on region approximation refinement,” 2019 IEEEIntelligent Vehicles Symposium (IV), pp. 2510–2515, 2019.

J. Ku, M. Mozifian, J. Lee, A. Harakeh, and S. L. Waslander, “Joint 3dproposal generation and object detection from view aggregation,” 2018IEEE/RSJ International Conference on Intelligent Robots and Systems(IROS), pp. 1–8, 2018.

X. Chen, H. Ma, J. Wan, B. Li, and T. Xia, “Multi-view 3d objectdetection network for autonomous driving,” Proceedings of the IEEEconference on Computer Vision and Pattern Recognition, pp. 1907–1915, 2017.

J. Levinson and S. Thrun, “Automatic online calibration of camerasand lasers.,” Robotics: Science and Systems, vol. 2, p. 7, 2013.

A. Dhall, K. Chelani, V. Radhakrishnan, and K. M. Krishna, “Lidar-camera calibration using 3d-3d point correspondences,” arXiv preprintarXiv:1705.09785, 2017.

A. I. Mourikis and S. I. Roumeliotis, “A multi-state constraint kalmanfilter for vision-aided inertial navigation,” Proceedings 2007 IEEEInternational Conference on Robotics and Automation, pp. 3565–3572,2007.

D. Zou, Y. Wu, L. Pei, H. Ling, and W. Yu, “Structvio: visual-inertialodometry with structural regularity of man-made environments,” IEEETransactions on Robotics, vol. 35, no. 4, pp. 999–1013, 2019.

V. Usenko, N. Demmel, D. Schubert, J. Stückler, and D. Cre-mers, “Visual-inertial mapping with non-linear factor recovery,” IEEERobotics and Automation Letters, vol. 5, no. 2, pp. 422–429, 2019.

C. Forster, L. Carlone, F. Dellaert, and D. Scaramuzza, “On-manifoldpreintegration for real-time visual–inertial odometry,” IEEE Transac-tions on Robotics, vol. 33, no. 1, pp. 1–21, 2016.

A. Rosinol, M. Abate, Y. Chang, and L. Carlone, “Kimera: an open-source library for real-time metric-semantic localization and mapping,”2020 IEEE International Conference on Robotics and Automation(ICRA), pp. 1689–1696, 2020.

M. Bloesch, S. Omari, M. Hutter, and R. Siegwart, “Robust visualinertial odometry using a direct ekf-based approach,” 2015 IEEE/RSJinternational conference on intelligent robots and systems (IROS),pp. 298–304, 2015.

L. Von Stumberg, V. Usenko, and D. Cremers, “Direct sparse visual-inertial odometry using dynamic marginalization,” 2018 IEEE Interna-tional Conference on Robotics and Automation (ICRA), pp. 2510–2517,2018.

V. Usenko, J. Engel, J. Stückler, and D. Cremers, “Direct visual-inertialodometry with stereo cameras,” 2016 IEEE International Conferenceon Robotics and Automation (ICRA), pp. 1885–1892, 2016.

T. Qin, P. Li, and S. Shen, “Vins-mono: A robust and versatile monoc-ular visual-inertial state estimator,” IEEE Transactions on Robotics,vol. 34, no. 4, pp. 1004–1020, 2018.

T. Qin, J. Pan, S. Cao, and S. Shen, “A general optimization-basedframework for local odometry estimation with multiple sensors,” arXivpreprint arXiv:1901.03638, 2019.

T. Schneider, M. Dymczyk, M. Fehr, K. Egger, S. Lynen, I. Gilitschen-ski, and R. Siegwart, “maplab: An open framework for research invisual-inertial mapping and localization,” IEEE Robotics and Automa-tion Letters, vol. 3, no. 3, pp. 1418–1425, 2018.

C. Campos, J. M. Montiel, and J. D. Tardós, “Inertial-only optimizationfor visual-inertial initialization,” 2020 IEEE International Conferenceon Robotics and Automation (ICRA), pp. 51–57, 2020.

T. Shan, B. Englot, D. Meyers, W. Wang, C. Ratti, and D. Rus,“Lio-sam: Tightly-coupled lidar inertial odometry via smoothing andmapping,” 2020 IEEE/RSJ International Conference on IntelligentRobots and Systems (IROS), pp. 5135–5142, 2020.

P. Geneva, K. Eckenhoff, Y. Yang, and G. Huang, “Lips: Lidar-inertial3d plane slam,” 2018 IEEE/RSJ International Conference on IntelligentRobots and Systems (IROS), pp. 123–130, 2018.

F. Neuhaus, T. Koß, R. Kohnen, and D. Paulus, “Mc2slam: Real-timeinertial lidar odometry using two-scan motion compensation,” GermanConference on Pattern Recognition, pp. 60–72, 2018.

R. Opromolla, G. Fasano, G. Rufino, M. Grassi, and A. Savvaris,“Lidar-inertial integration for uav localization and mapping in complexenvironments,” 2016 International Conference on Unmanned AircraftSystems (ICUAS), pp. 649–656, 2016.

B. Bescos, J. Neira, R. Siegwart, and C. Cadena, “Empty cities: Imageinpainting for a dynamic-object-invariant space,” 2019 InternationalConference on Robotics and Automation (ICRA), pp. 5460–5466, 2019.

B. Bescos, C. Cadena, and J. Neira, “Empty cities: A dynamic-object-invariant space for visual slam,” IEEE Transactions on Robotics, 2020.

K. He, G. Gkioxari, P. Dollár, and R. Girshick, “Mask r-cnn,” Pro-ceedings of the IEEE international conference on computer vision,pp. 2961–2969, 2017.

J. Cheng, Z. Wang, H. Zhou, L. Li, and J. Yao, “Dm-slam: A feature-based slam system for rigid dynamic scenes,” ISPRS InternationalJournal of Geo-Information, vol. 9, no. 4, p. 202, 2020.

C. Yu, Z. Liu, X.-J. Liu, F. Xie, Y. Yang, Q. Wei, and Q. Fei, “Ds-slam: A semantic visual slam towards dynamic environments,” 2018IEEE/RSJ International Conference on Intelligent Robots and Systems(IROS), pp. 1168–1174, 2018.

G. Liu, W. Zeng, B. Feng, and F. Xu, “Dms-slam: A general visual slamsystem for dynamic scenes with multiple sensors,” Sensors, vol. 19,no. 17, p. 3714, 2019.

D. Esparza and G. Flores, “The stdyn-slam: a stereo vision andsemantic segmentation approach for vslam in dynamic outdoor envi-ronments,” IEEE Access, vol. 10, pp. 18201–18209, 2022.

C. Zhang, T. Huang, R. Zhang, and X. Yi, “Pld-slam: A new rgb-dslam method with point and line features for indoor dynamic scene,”ISPRS International Journal of Geo-Information, vol. 10, no. 3, p. 163,2021.

J. Cheng, Y. Sun, and M. Q.-H. Meng, “Improving monocular visualslam in dynamic environments: an optical-flow-based approach,” Ad-vanced Robotics, vol. 33, no. 12, pp. 576–589, 2019.

N. Yu, M. Gan, H. Yu, and K. Yang, “Drso-slam: A dynamic rgb-d slamalgorithm for indoor dynamic scenes,” in 2021 33rd Chinese Controland Decision Conference (CCDC), pp. 1052–1058, IEEE, 2021.

E. Mouragnon, M. Lhuillier, M. Dhome, F. Dekeyser, and P. Sayd,“Monocular vision based slam for mobile robots,” 18th InternationalConference on Pattern Recognition (ICPR’06), vol. 3, pp. 1027–1031,2006.

J. Delmerico and D. Scaramuzza, “A benchmark comparison ofmonocular visual-inertial odometry algorithms for flying robots,” 2018IEEE International Conference on Robotics and Automation (ICRA),pp. 2502–2509, 2018.

N. Yang, R. Wang, J. Stuckler, and D. Cremers, “Deep virtual stereoodometry: Leveraging deep depth prediction for monocular directsparse odometry,” Proceedings of the European Conference on Com-puter Vision (ECCV), pp. 817–833, 2018.

P. Schmuck and M. Chli, “Ccm-slam: Robust and efficient centralizedcollaborative monocular simultaneous localization and mapping forrobotic teams,” Journal of Field Robotics, vol. 36, no. 4, pp. 763–781,2019.

C. Mei, G. Sibley, M. Cummins, P. Newman, and I. Reid, “A constant-time efficient stereo slam system,” Proceedings of the British machinevision conference, vol. 1, no. 2009, 2009.

J. Engel, J. Stückler, and D. Cremers, “Large-scale direct slam withstereo cameras,” 2015 IEEE/RSJ International Conference on Intelli-gent Robots and Systems (IROS), pp. 1935–1942, 2015.

G.-x. Xin, X.-t. Zhang, X. Wang, and J. Song, “A rgbd slam algorithmcombining orb with prosac for indoor mobile robot,” in 2015 4th In-ternational Conference on Computer Science and Network Technology(ICCSNT), vol. 1, pp. 71–74, IEEE, 2015.

Q. Li, X. Wang, T. Wu, and H. Yang, “Point-line feature fusion basedfield real-time rgb-d slam,” Computers & Graphics, vol. 107, pp. 10–19, 2022.

Y. Wang, K. Xu, Y. Tian, and X. Ding, “Drg-slam: A semantic rgb-d slam using geometric features for indoor dynamic scene,” in 2022IEEE/RSJ International Conference on Intelligent Robots and Systems(IROS), pp. 1352–1359, IEEE, 2022.

Y. Liu, M. Xu, G. Jiang, X. Tong, J. Yun, Y. Liu, B. Chen, Y. Cao,N. Sun, and Z. Li, “Target localization in local dense mappingusing rgbd slam and object detection,” Concurrency and Computation:Practice and Experience, vol. 34, no. 4, p. e6655, 2022.

G. Gallego, T. Delbrück, G. Orchard, C. Bartolozzi, B. Taba, A. Censi,S. Leutenegger, A. J. Davison, J. Conradt, K. Daniilidis, et al., “Event-based vision: A survey,” IEEE transactions on pattern analysis andmachine intelligence, vol. 44, no. 1, pp. 154–180, 2020.

W. Chamorro, J. Solà, and J. Andrade-Cetto, “Event-based line slamin real-time,” IEEE Robotics and Automation Letters, vol. 7, no. 3,pp. 8146–8153, 2022.

A. G. Gelen and A. Atasoy, “An artificial neural slam framework forevent-based vision,” IEEE Access, 2023.

J. Engel, T. Schöps, and D. Cremers, “Lsd-slam: Large-scale directmonocular slam,” European conference on computer vision, pp. 834–849, 2014.

R. Wang, M. Schworer, and D. Cremers, “Stereo dso: Large-scale directsparse visual odometry with stereo cameras,” Proceedings of the IEEEInternational Conference on Computer Vision, pp. 3903–3911, 2017.

X. Gao, R. Wang, N. Demmel, and D. Cremers, “Ldso: Direct sparseodometry with loop closure,” 2018 IEEE/RSJ International Conferenceon Intelligent Robots and Systems (IROS), pp. 2198–2204, 2018.

N. Yang, L. v. Stumberg, R. Wang, and D. Cremers, “D3vo: Deepdepth, deep pose and deep uncertainty for monocular visual odometry,”Proceedings of the IEEE/CVF Conference on Computer Vision andPattern Recognition, pp. 1281–1292, 2020.

D. G. Lowe, “Distinctive image features from scale-invariant key-points,” International journal of computer vision, vol. 60, no. 2, pp. 91–110, 2004.

H. Bay, T. Tuytelaars, and L. Van Gool, “Surf: Speeded up robustfeatures,” European conference on computer vision, pp. 404–417, 2006.

E. Rublee, V. Rabaud, K. Konolige, and G. Bradski, “Orb: An efficientalternative to sift or surf,” 2011 International conference on computervision, pp. 2564–2571, 2011.

M. Calonder, V. Lepetit, C. Strecha, and P. Fua, “Brief: Binary robustindependent elementary features,” European conference on computervision, pp. 778–792, 2010.

E. Rosten and T. Drummond, “Machine learning for high-speed cornerdetection,” European conference on computer vision, pp. 430–443,2006.

S. Leutenegger, M. Chli, and R. Y. Siegwart, “Brisk: Binary robust in-variant scalable keypoints,” 2011 International conference on computervision, pp. 2548–2555, 2011.

D. DeTone, T. Malisiewicz, and A. Rabinovich, “Superpoint: Self-supervised interest point detection and description,” Proceedings ofthe IEEE conference on computer vision and pattern recognitionworkshops, pp. 224–236, 2018.

J. Tang, J. Folkesson, and P. Jensfelt, “Geometric correspondencenetwork for camera motion estimation,” IEEE Robotics and AutomationLetters, vol. 3, no. 2, pp. 1010–1017, 2018.

J. Tang, L. Ericson, J. Folkesson, and P. Jensfelt, “Gcnv2: Efficientcorrespondence prediction for real-time slam,” IEEE Robotics andAutomation Letters, vol. 4, no. 4, pp. 3505–3512, 2019.

J. Li, X. Wang, and S. Li, “Spherical-model-based slam on full-viewimages for indoor environments,” Applied Sciences, vol. 8, no. 11,p. 2268, 2018.

T. Pire, T. Fischer, G. Castro, P. De Cristóforis, J. Civera, and J. J.Berlles, “S-ptam: Stereo parallel tracking and mapping,” Robotics andAutonomous Systems, vol. 93, pp. 27–42, 2017.

C. Forster, M. Pizzoli, and D. Scaramuzza, “Svo: Fast semi-directmonocular visual odometry,” 2014 IEEE international conference onrobotics and automation (ICRA), pp. 15–22, 2014.

C. Forster, Z. Zhang, M. Gassner, M. Werlberger, and D. Scaramuzza, “SVO: Semidirect visual odometry for monocular and multicamera systems,” IEEE Transactions on Robotics, vol. 33, no. 2, pp. 249–265, 2016.

S.-p. Li, T. Zhang, X. Gao, D. Wang, and Y. Xian, “Semi-direct monocular visual and visual-inertial SLAM with loop closure detection,” Robotics and Autonomous Systems, vol. 112, pp. 201–210, 2019.

S. H. Lee and J. Civera, “Loosely-coupled semi-direct monocular SLAM,” IEEE Robotics and Automation Letters, vol. 4, no. 2, pp. 399–406, 2018.

K. M. Yi, E. Trulls, V. Lepetit, and P. Fua, “LIFT: Learned invariant feature transform,” European Conference on Computer Vision, pp. 467–483, 2016.

D. Li, X. Shi, Q. Long, S. Liu, W. Yang, F. Wang, Q. Wei, and F. Qiao, “DXSLAM: A robust and efficient visual SLAM system with deep features,” in 2020 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 4958–4965, IEEE, 2020.

T. Weyand, I. Kostrikov, and J. Philbin, “PlaNet - photo geolocation with convolutional neural networks,” European Conference on Computer Vision, pp. 37–55, 2016.

G. Zhang, X. Yan, Y. Xu, and Y. Ye, “Neural guided visual SLAM system with Laplacian of Gaussian operator,” IET Computer Vision, vol. 15, no. 3, pp. 181–196, 2021.

B. Dongdong, W. Chaoqun, B. Zhang, Y. Xiaodong, Y. Xuejun, et al., “CNN feature boosted SeqSLAM for real-time loop closure detection,” Chinese Journal of Electronics, vol. 27, no. 3, pp. 488–499, 2018.

A. R. Memon, H. Wang, and A. Hussain, “Loop closure detection using supervised and unsupervised deep neural networks for monocular SLAM systems,” Robotics and Autonomous Systems, vol. 126, p. 103470, 2020.

X. Gao and T. Zhang, “Unsupervised learning to detect loops using deep neural networks for visual SLAM system,” Autonomous Robots, vol. 41, pp. 1–18, 2017.

A. Kendall, M. Grimes, and R. Cipolla, “PoseNet: A convolutional network for real-time 6-DOF camera relocalization,” Proceedings of the IEEE International Conference on Computer Vision, pp. 2938–2946, 2015.

F. Walch, C. Hazirbas, L. Leal-Taixe, T. Sattler, S. Hilsenbeck, and D. Cremers, “Image-based localization using LSTMs for structured feature correlation,” Proceedings of the IEEE International Conference on Computer Vision, pp. 627–637, 2017.

A. Kendall and R. Cipolla, “Geometric loss functions for camera pose regression with deep learning,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5974–5983, 2017.

Z. Lv, F. Dellaert, J. M. Rehg, and A. Geiger, “Taking a deeper look at the inverse compositional algorithm,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 4581–4590, 2019.

Y. An, J. Shi, D. Gu, and Q. Liu, “Visual-LiDAR SLAM based on unsupervised multi-channel deep neural networks,” Cognitive Computation, vol. 14, no. 4, pp. 1496–1508, 2022.

M. F. Aslan, A. Durdu, A. Yusefi, and A. Yilmaz, “HVIOnet: A deep learning based hybrid visual–inertial odometry approach for unmanned aerial system position estimation,” Neural Networks, vol. 155, pp. 461–474, 2022.

W. Yuan, X. Gu, Z. Dai, S. Zhu, and P. Tan, “Neural window fully-connected CRFs for monocular depth estimation,” in Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3916–3925, 2022.

A. Agarwal and C. Arora, “Attention attention everywhere: Monocular depth prediction with skip attention,” in Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp. 5861–5870, 2023.

R. Mahjourian, M. Wicke, and A. Angelova, “Unsupervised learning of depth and ego-motion from monocular video using 3D geometric constraints,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5667–5675, 2018.

H. Zhan, R. Garg, C. S. Weerasekera, K. Li, H. Agarwal, and I. Reid, “Unsupervised learning of monocular depth estimation and visual odometry with deep feature reconstruction,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 340–349, 2018.

IEEE LATIN AMERICA TRANSACTIONS, Vol. 7, No. 7, Oct 2020

R. Li, S. Wang, Z. Long, and D. Gu, “UnDeepVO: Monocular visual odometry through unsupervised deep learning,” 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 7286–7291, 2018.

T. Zhou, M. Brown, N. Snavely, and D. G. Lowe, “Unsupervised learning of depth and ego-motion from video,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1851–1858, 2017.

K. Tateno, F. Tombari, I. Laina, and N. Navab, “CNN-SLAM: Real-time dense monocular SLAM with learned depth prediction,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), July 2017.

C. Tang and P. Tan, “BA-Net: Dense bundle adjustment network,” arXiv preprint arXiv:1806.04807, 2018.

K. Wang, K. Wang, and S. Shen, “FlowNorm: A learning-based method for increasing convergence range of direct alignment,” in 2020 IEEE International Conference on Robotics and Automation (ICRA), pp. 2109–2115, IEEE, 2020.

S. Wen, T. Wang, and S. Tao, “Hybrid CNN-LSTM architecture for LiDAR point clouds semantic segmentation,” IEEE Robotics and Automation Letters, vol. 7, no. 3, pp. 5811–5818, 2022.

A. Diab, R. Kashef, and A. Shaker, “Deep learning for LiDAR point cloud classification in remote sensing,” Sensors, vol. 22, no. 20, p. 7868, 2022.

G. Spampinato, A. Bruna, I. Guarneri, and D. Giacalone, “Deep learning localization with 2D range scanner,” 2021 7th International Conference on Automation, Robotics and Applications (ICARA), pp. 206–210, 2021.

X. Chen, T. Läbe, A. Milioto, T. Röhling, O. Vysotska, A. Haag, J. Behley, and C. Stachniss, “OverlapNet: Loop closing for LiDAR-based SLAM,” arXiv preprint arXiv:2105.11344, 2021.

W. Lu, Y. Zhou, G. Wan, S. Hou, and S. Song, “L3-Net: Towards learning based LiDAR localization for autonomous driving,” Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6389–6398, 2019.

Y. Cho, G. Kim, and A. Kim, “DeepLO: Geometry-aware deep LiDAR odometry,” arXiv preprint arXiv:1902.10562, 2019.

R. Buchanan, V. Agrawal, M. Camurri, F. Dellaert, and M. Fallon, “Deep IMU bias inference for robust visual-inertial odometry with factor graphs,” IEEE Robotics and Automation Letters, vol. 8, no. 1, pp. 41–48, 2022.

Z. Wang, Y. Zhu, K. Lu, D. Freer, H. Wu, and H. Chen, “Attention guided unsupervised learning of monocular visual-inertial odometry,” in 2022 IEEE Intelligent Vehicles Symposium (IV), pp. 651–657, IEEE, 2022.

R. Clark, S. Wang, H. Wen, A. Markham, and N. Trigoni, “VINet: Visual-inertial odometry as a sequence-to-sequence learning problem,” Proceedings of the AAAI Conference on Artificial Intelligence, vol. 31, 2017.

L. Han, Y. Lin, G. Du, and S. Lian, “DeepVIO: Self-supervised deep learning of monocular visual inertial odometry using 3D geometric constraints,” 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 6906–6913, 2019.

E. J. Shamwell, K. Lindgren, S. Leung, and W. D. Nothwang, “Unsupervised deep visual-inertial odometry with online error correction for RGB-D imagery,” IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 42, no. 10, pp. 2478–2493, 2019.

M. F. Aslan, A. Durdu, and K. Sabanci, “Visual-inertial image-odometry network (VIIONet): A Gaussian process regression-based deep architecture proposal for UAV pose estimation,” Measurement, vol. 194, p. 111030, 2022.

C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna, “Rethinking the inception architecture for computer vision,” in Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2818–2826, 2016.

Y. Almalioglu, M. Turan, M. R. U. Saputra, P. P. de Gusmão, A. Markham, and N. Trigoni, “SelfVIO: Self-supervised deep monocular visual–inertial odometry and depth estimation,” Neural Networks, vol. 150, pp. 119–136, 2022.

E. Romera, J. M. Alvarez, L. M. Bergasa, and R. Arroyo, “ERFNet: Efficient residual factorized ConvNet for real-time semantic segmentation,” IEEE Transactions on Intelligent Transportation Systems, vol. 19, no. 1, pp. 263–272, 2017.

C. Hazirbas, L. Ma, C. Domokos, and D. Cremers, “FuseNet: Incorporating depth into semantic segmentation via fusion-based CNN architecture,” Asian Conference on Computer Vision, pp. 213–228, 2016.

G. Marchesi, C. Eichhorn, D. A. Plecher, Y. Itoh, and G. Klinker, “EnvSLAM: Combining SLAM systems and neural networks to improve the environment fusion in AR applications,” ISPRS International Journal of Geo-Information, vol. 10, no. 11, p. 772, 2021.

A. G. Howard, M. Zhu, B. Chen, D. Kalenichenko, W. Wang, T. Weyand, M. Andreetto, and H. Adam, “MobileNets: Efficient convolutional neural networks for mobile vision applications,” arXiv preprint arXiv:1704.04861, 2017.

A. Valada, J. Vertens, A. Dhall, and W. Burgard, “AdapNet: Adaptive semantic segmentation in adverse environmental conditions,” 2017 IEEE International Conference on Robotics and Automation (ICRA), pp. 4644–4651, 2017.

N. Radwan, A. Valada, and W. Burgard, “VLocNet++: Deep multitask learning for semantic visual localization and odometry,” IEEE Robotics and Automation Letters, vol. 3, no. 4, pp. 4407–4414, 2018.

S. Jin, L. Chen, R. Sun, and S. McLoone, “A novel vSLAM framework with unsupervised semantic segmentation based on adversarial transfer learning,” Applied Soft Computing, vol. 90, p. 106153, 2020.

Y. Zhao, Z. Xiong, S. Zhou, Z. Peng, P. Campoy, and L. Zhang, “KSF-SLAM: A key segmentation frame based semantic SLAM in dynamic environments,” Journal of Intelligent & Robotic Systems, vol. 105, no. 1, p. 3, 2022.

A. Valada, N. Radwan, and W. Burgard, “Deep auxiliary learning for visual localization and odometry,” 2018 IEEE International Conference on Robotics and Automation (ICRA), pp. 6939–6946, 2018.

J. McCormac, R. Clark, M. Bloesch, A. Davison, and S. Leutenegger, “Fusion++: Volumetric object-level SLAM,” 2018 International Conference on 3D Vision (3DV), pp. 32–41, 2018.

E. Sucar, S. Liu, J. Ortiz, and A. J. Davison, “iMAP: Implicit mapping and positioning in real-time,” in Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 6229–6238, 2021.

A. Geiger, P. Lenz, C. Stiller, and R. Urtasun, “Vision meets robotics: The KITTI dataset,” The International Journal of Robotics Research, vol. 32, no. 11, pp. 1231–1237, 2013.

M. Burri, J. Nikolic, P. Gohl, T. Schneider, J. Rehder, S. Omari, M. W. Achtelik, and R. Siegwart, “The EuRoC micro aerial vehicle datasets,” The International Journal of Robotics Research, vol. 35, no. 10, pp. 1157–1163, 2016.

W. Maddern, G. Pascoe, C. Linegar, and P. Newman, “1 year, 1000 km: The Oxford RobotCar dataset,” The International Journal of Robotics Research, vol. 36, no. 1, pp. 3–15, 2017.

J. Sturm, N. Engelhard, F. Endres, W. Burgard, and D. Cremers, “A benchmark for the evaluation of RGB-D SLAM systems,” 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, pp. 573–580, 2012.

J. Engel, V. Usenko, and D. Cremers, “A photometrically calibrated benchmark for monocular visual odometry,” arXiv preprint arXiv:1607.02555, 2016.

D. Schubert, T. Goll, N. Demmel, V. Usenko, J. Stückler, and D. Cremers, “The TUM VI benchmark for evaluating visual-inertial odometry,” 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pp. 1680–1687, 2018.

J.-L. Blanco, F.-A. Moreno, and J. Gonzalez, “A collection of outdoor robotic datasets with centimeter-accuracy ground truth,” Autonomous Robots, vol. 27, no. 4, pp. 327–351, 2009.

G. Pandey, J. R. McBride, and R. M. Eustice, “Ford Campus vision and LiDAR data set,” The International Journal of Robotics Research, vol. 30, no. 13, pp. 1543–1552, 2011.

A. Handa, T. Whelan, J. McDonald, and A. J. Davison, “A benchmark for RGB-D visual odometry, 3D reconstruction and SLAM,” 2014 IEEE International Conference on Robotics and Automation (ICRA), pp. 1524–1531, 2014.

N. Carlevaris-Bianco, A. K. Ushani, and R. M. Eustice, “University of Michigan North Campus long-term vision and LiDAR dataset,” The International Journal of Robotics Research, vol. 35, no. 9, pp. 1023–1035, 2016.

M. Fallon, H. Johannsson, M. Kaess, and J. J. Leonard, “The MIT Stata Center dataset,” The International Journal of Robotics Research, vol. 32, no. 14, pp. 1695–1699, 2013.

B. Glocker, S. Izadi, J. Shotton, and A. Criminisi, “Real-time RGB-D camera relocalization,” 2013 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), pp. 173–179, 2013.

J.-L. Blanco-Claraco, F.-A. Moreno-Duenas, and J. González-Jiménez, “The Málaga urban dataset: High-rate stereo and LiDAR in a realistic urban scenario,” The International Journal of Robotics Research, vol. 33, no. 2, pp. 207–214, 2014.

A. S. Huang, M. Antone, E. Olson, L. Fletcher, D. Moore, S. Teller, and J. Leonard, “A high-rate, heterogeneous data set from the DARPA Urban Challenge,” The International Journal of Robotics Research, vol. 29, no. 13, pp. 1595–1601, 2010.

S. Y. Loo, A. J. Amiri, S. Mashohor, S. H. Tang, and H. Zhang, “CNN-SVO: Improving the mapping in semi-direct visual odometry using single-image depth prediction,” 2019 International Conference on Robotics and Automation (ICRA), pp. 5218–5223, 2019.

J. A. Placed and J. A. Castellanos, “A deep reinforcement learning approach for active SLAM,” Applied Sciences, vol. 10, no. 23, p. 8386, 2020.

B. K. Horn, “Closed-form solution of absolute orientation using unit quaternions,” JOSA A, vol. 4, no. 4, pp. 629–642, 1987.

E. Brachmann, A. Krull, S. Nowozin, J. Shotton, F. Michel, S. Gumhold, and C. Rother, “DSAC - differentiable RANSAC for camera localization,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 6684–6692, 2017.

E. Brachmann and C. Rother, “Learning less is more - 6D camera localization via 3D surface regression,” Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4654–4662, 2018.

A. Geiger, P. Lenz, and R. Urtasun, “Are we ready for autonomous driving? The KITTI vision benchmark suite,” 2012 IEEE Conference on Computer Vision and Pattern Recognition, pp. 3354–3361, 2012.

M. Bujanca, X. Shi, M. Spear, P. Zhao, B. Lennox, and M. Luján, “Robust SLAM systems: Are we there yet?,” 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2021.

M. Bujanca, P. Gafton, S. Saeedi, A. Nisbet, B. Bodin, M. F. O’Boyle, A. Davison, P. Kelly, G. Riley, B. Lennox, M. Luján, and S. Furber, “SLAMBench 3.0: Systematic automated reproducible evaluation of SLAM systems for robot vision challenges and scene understanding,” 2019 International Conference on Robotics and Automation (ICRA), pp. 6351–6358, 2019.

R. Duan, Y. Feng, and C.-Y. Wen, “Deep pose graph-matching-based loop closure detection for semantic visual SLAM,” Sustainability, vol. 14, no. 19, p. 11864, 2022.

J. Wu, Q. Shi, Q. Lu, X. Liu, X. Zhu, and Z. Lin, “Learning invariant semantic representation for long-term robust visual localization,” Engineering Applications of Artificial Intelligence, vol. 111, p. 104793, 2022.

S. Wen, Y. Zhao, X. Yuan, Z. Wang, D. Zhang, and L. Manfredi, “Path planning for active SLAM based on deep reinforcement learning under unknown environments,” Intelligent Service Robotics, vol. 13, pp. 263–272, 2020.

K. Naveed, M. L. Anjum, W. Hussain, and D. Lee, “Deep introspective SLAM: Deep reinforcement learning based approach to avoid tracking failure in visual SLAM,” Autonomous Robots, vol. 46, no. 6, pp. 705–724, 2022.

A. Safa, T. Verbelen, I. Ocket, A. Bourdoux, H. Sahli, F. Catthoor, and G. Gielen, “Fusing event-based camera and radar for SLAM using spiking neural networks with continual STDP learning,” in 2023 IEEE International Conference on Robotics and Automation (ICRA), pp. 2782–2788, IEEE, 2023.