An improved Soft Actor-Critic strategy for optimal energy management

Keywords:

Energy management, Distributed resources, Demand response, Deep Reinforcement Learning

Abstract

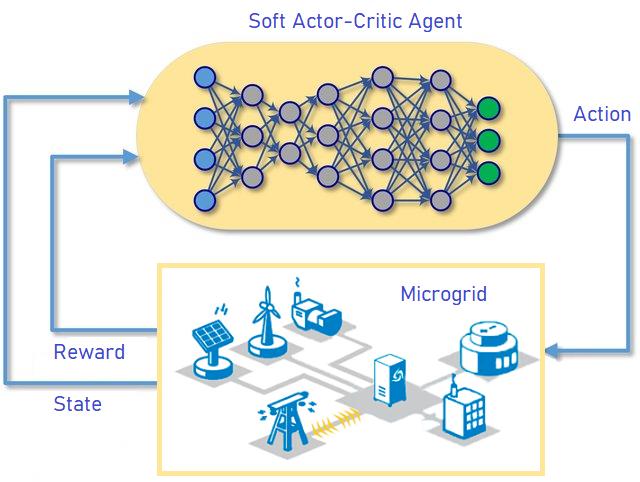

The transition from the current electrical grid to a smart, sustainable, efficient, and flexible one requires identifying the capabilities the future grid will need, so that the system can monitor, predict, learn, and make decisions on local energy consumption and production in real time. A microgrid with these characteristics allows distributed renewable energy systems to be integrated efficiently, reducing the demand on power plants. Reinforcement learning can help find creative ways to keep the grid balanced, reschedule energy consumption through incentives, predict demand and available energy at the grid scale, and assess the complexity of making these decisions. This work proposes using the novel Soft Actor-Critic (SAC) deep reinforcement learning technique to manage electrical microgrids efficiently. SAC uses an entropy-based objective function that helps it overcome convergence brittleness: it encourages exploration, so that no part of the action range is assigned a disproportionately high probability. Results show the benefits of the proposed technique for the coordinated energy management of the microgrid.
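For readers unfamiliar with the entropy-based objective mentioned above, the following is a sketch of the standard maximum-entropy formulation commonly used to describe SAC. The symbols are assumptions introduced here for illustration and may differ from the notation in the full paper: $\pi$ is the policy, $\rho_\pi$ the state-action distribution it induces, $r$ the reward, and $\alpha$ a temperature coefficient that weights the entropy term.

```latex
% Maximum-entropy objective (standard SAC form; notation assumed, not taken from this page)
J(\pi) = \sum_{t=0}^{T} \mathbb{E}_{(s_t, a_t) \sim \rho_\pi}
         \left[ r(s_t, a_t) + \alpha \, \mathcal{H}\!\left(\pi(\cdot \mid s_t)\right) \right],
\qquad
\mathcal{H}\!\left(\pi(\cdot \mid s_t)\right)
  = - \mathbb{E}_{a \sim \pi(\cdot \mid s_t)} \left[ \log \pi(a \mid s_t) \right]
```

With $\alpha = 0$ this reduces to the usual expected-return objective. A larger $\alpha$ rewards policies that keep their action distribution spread out, which is the exploration effect the abstract describes.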
