A Data-Centric Approach for Portuguese Speech Recognition: Language Model And Its Implications

Keywords:

automatic speech recognition, language model, Brazilian Portuguese, wav2vec2, KenLM

Abstract

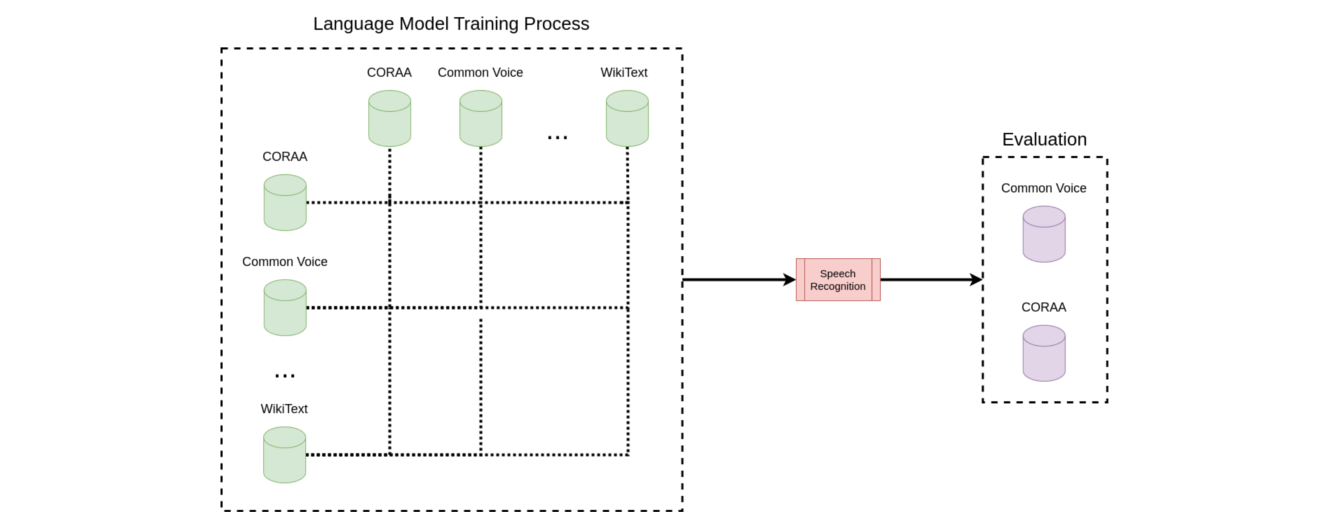

Recent advances in Automatic Speech Recognition (ASR) have made it possible to achieve a transcription quality never before seen in the literature. This holds both for languages with abundant data, such as English, which has been the subject of a large number of studies, and for Portuguese, which has more limited resources and studies. The most recent advances address speech recognition with Transformer-based models, which can perform recognition directly from the raw signal, without manual feature extraction. Some studies have already shown that the transcription quality of these models can be further improved by using language models in the decoding stage; however, the real impact of such language models is still unclear, especially for Brazilian Portuguese. It is also known that the quality of the training data is of paramount importance, yet few works in the literature address this issue. This work takes a data-centric approach to explore the impact of language models on Portuguese speech recognition, in terms of both data quality and computational performance. We propose an approach to measure the similarity between datasets and thus assist decision-making during training. The approach indicates paths for advancing the state of the art in Portuguese speech recognition, showing that it is possible to reduce the size of the language model by 80% and still achieve error rates around 7.17% on the Common Voice dataset. The source code is available at https://github.com/joaoalvarenga/language-model-evaluation.
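The abstract does not describe the decoder in detail, so the following is only a minimal sketch, under stated assumptions, of two ideas it relies on: adding a language model to the decoding stage, and measuring the error rate of a transcription. It assumes the shallow-fusion form commonly used by n-gram (KenLM-style) beam-search decoders, score = log P_acoustic + alpha * log P_LM + beta * |words|, and it substitutes a hypothetical add-one smoothed bigram model (`ToyBigramLM`) for a real KenLM model. The n-best list, its acoustic scores, and the weights `alpha` and `beta` are illustrative values, not results or settings from this work. Word error rate (WER) is computed as the word-level Levenshtein distance divided by the number of reference words.

```python
import math
from collections import Counter


def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / reference length,
    computed as a word-level Levenshtein distance."""
    ref, hyp = reference.split(), hypothesis.split()
    # prev[j] = edit distance between the first i-1 reference words and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(prev[j] + 1,         # deletion
                          curr[j - 1] + 1,     # insertion
                          prev[j - 1] + cost)  # substitution or match
        prev = curr
    return prev[-1] / max(len(ref), 1)


class ToyBigramLM:
    """Hypothetical add-one smoothed bigram LM, standing in for a KenLM n-gram model."""

    def __init__(self, sentences):
        self.unigrams = Counter()  # counts of words used as bigram history
        self.bigrams = Counter()
        self.vocab = set()         # words that can follow a history (incl. </s>)
        for s in sentences:
            words = ["<s>"] + s.split() + ["</s>"]
            self.unigrams.update(words[:-1])
            self.bigrams.update(zip(words[:-1], words[1:]))
            self.vocab.update(words[1:])

    def log_prob(self, sentence: str) -> float:
        words = ["<s>"] + sentence.split() + ["</s>"]
        total = 0.0
        for prev, word in zip(words[:-1], words[1:]):
            num = self.bigrams[(prev, word)] + 1
            den = self.unigrams[prev] + len(self.vocab)
            total += math.log(num / den)
        return total


def fused_score(acoustic_log_prob, lm_log_prob, num_words, alpha, beta):
    # Shallow fusion: acoustic score + weighted LM score + word insertion bonus
    return acoustic_log_prob + alpha * lm_log_prob + beta * num_words


def rescore(nbest, lm, alpha=0.5, beta=1.0):
    """Pick the best (transcript, acoustic_log_prob) pair under shallow fusion."""
    return max(nbest, key=lambda h: fused_score(
        h[1], lm.log_prob(h[0]), len(h[0].split()), alpha, beta))


if __name__ == "__main__":
    lm = ToyBigramLM(["o gato subiu no telhado",
                      "o cachorro subiu no muro",
                      "o gato dormiu no muro"])
    # Hypothetical n-best list: (transcript, acoustic log-probability)
    nbest = [("o gato subiu no telado", -4.0),
             ("o gato subiu no telhado", -4.3)]
    reference = "o gato subiu no telhado"
    for alpha in (0.0, 0.5):
        best, _ = rescore(nbest, lm, alpha=alpha, beta=1.0)
        print(alpha, best, word_error_rate(reference, best))
```

With `alpha=0.0` the acoustically preferred but misspelled "telado" is kept (WER 0.2); with `alpha=0.5` the bigram model's preference for "telhado" outweighs the 0.3 acoustic gap and the chosen hypothesis has WER 0.0. In a real system the LM weight and insertion bonus would be tuned on held-out data rather than fixed by hand.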

