Medical Report Generation through Radiology Images: An Overview.

Keywords:

Medical Report, Deep Learning, Medical Images

Abstract

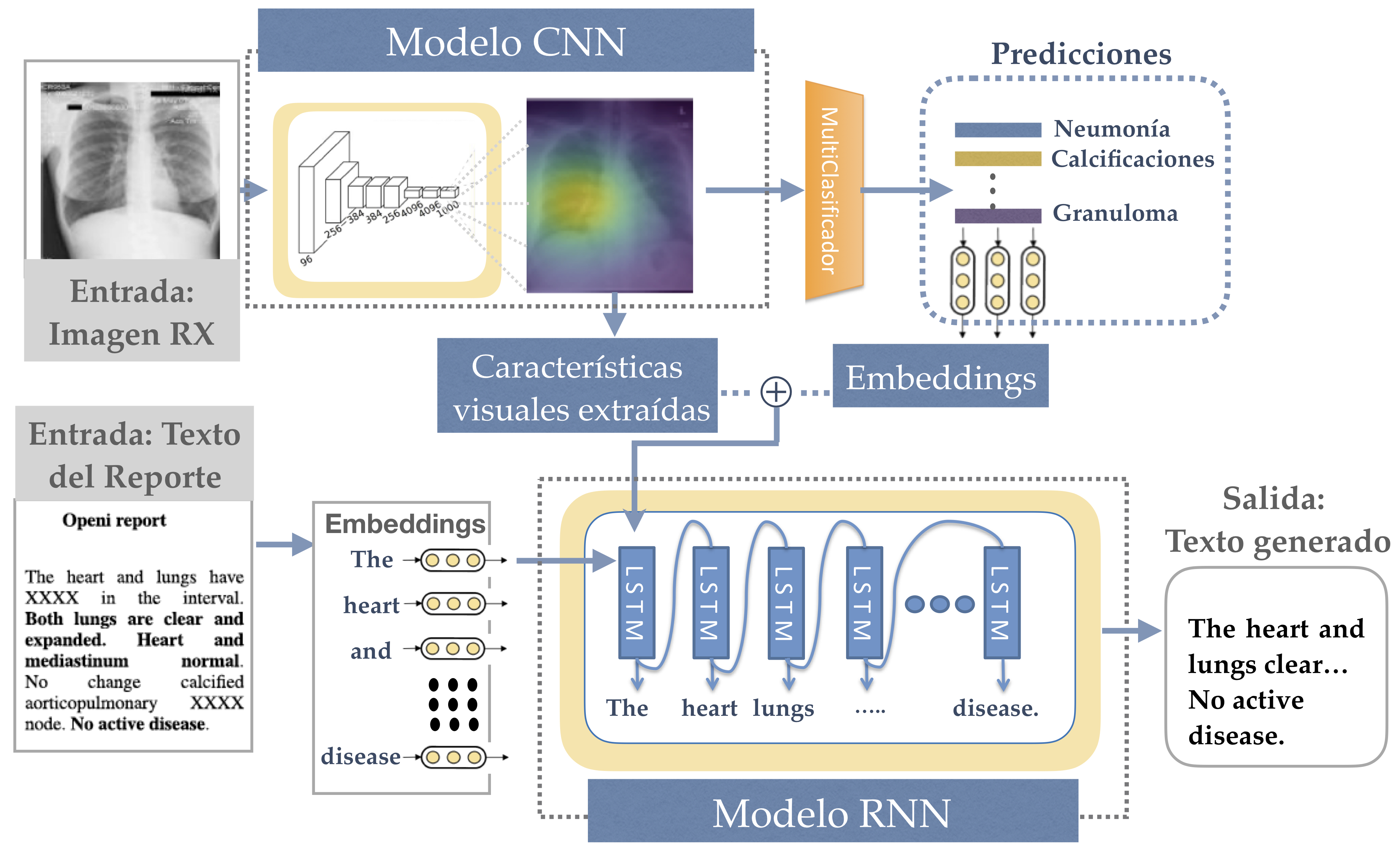

The interpretation of medical images is fundamental to the diagnosis and treatment of patients. It helps determine the causes of symptoms and monitor the effects of treatment. Although generating medical reports from images is a complex task, deep learning strategies have been integrated into models that tackle it with promising results. This work presents a compilation of the most prominent deep learning strategies for the automatic generation of radiology reports from X-ray images. Papers based on DenseNet, ResNet, and VGG architectures, in combination with Long Short-Term Memory (LSTM) networks and attention models, are analyzed in terms of their pre-processing strategies, the databases used, model adaptations, and metric results. These findings are summarized in this survey, highlighting the models that reported the highest performance.
