Motion Planning of Mobile Robots in Indoor Topological Environments using Partially Observable Markov Decision Process

Keywords:

Greedy optimization, Motion planning, Probabilistic robotics, Uncertainty

Abstract

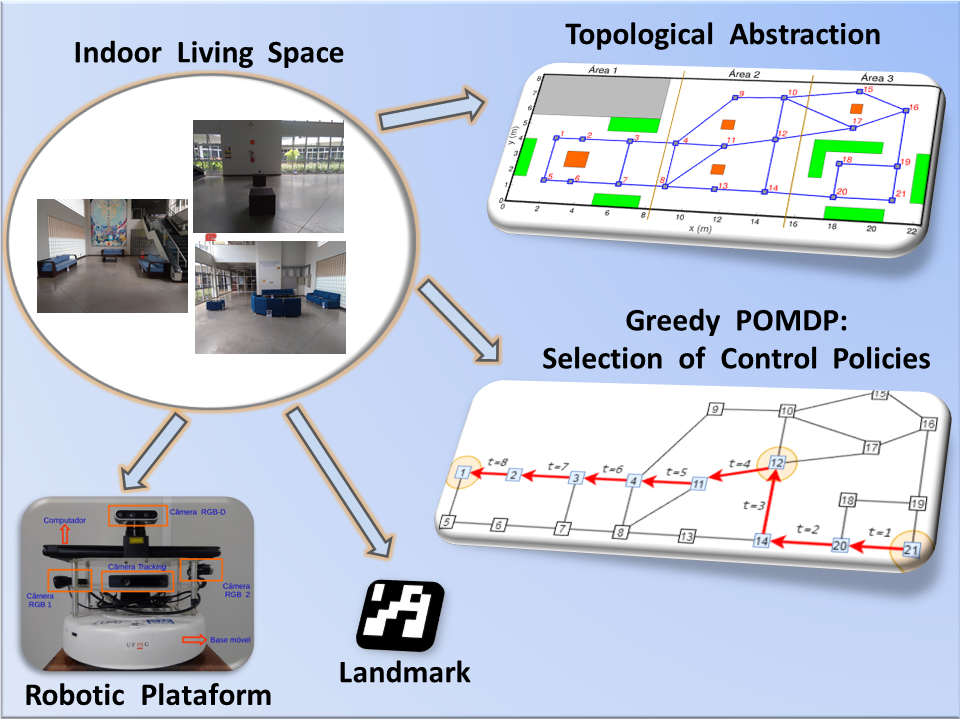

Deterministic motion planners perform well in simulated environments, where sensors and actuators are assumed to be perfect. However, these assumptions are restrictive. Real robotic systems (and more realistic simulators) are inherently fraught with uncertainty, so such planners perform poorly when applied to them. In most real robotic systems, states cannot be directly observed, and the outcomes of the robot's actions are uncertain. The robot must therefore use a different class of planners, one that accounts for system uncertainty when making decisions. In the present work, the Partially Observable Markov Decision Process (POMDP) is presented as an alternative for solving problems under uncertainty, selecting optimal actions to accomplish a given task. The contribution of this article is an implementation of the POMDP using greedy optimization, which considerably simplifies the decision-making problem in uncertain environments. This article also presents new ways to determine the parameters of the POMDP. The resulting approach was applied to control the actions of a real robot navigating an indoor topological living space with ambiguous information.
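The abstract does not give the model details, so the following is only a minimal sketch, under assumed parameters, of the two ingredients it names: a Bayesian belief update over the nodes of a topological map, and a greedy (one-step lookahead) choice of action from that belief. The three-node map, the transition, observation and reward values, and the names `update_belief`, `expected_reward` and `greedy_action` are all hypothetical and are not the authors' parameters or implementation.

```python
from typing import Dict, List

# Hypothetical topological map with three nodes: 0 = corridor, 1 = doorway, 2 = goal room.
STATES = [0, 1, 2]
ACTIONS = ["stay", "forward"]

# Assumed transition model: T[a][s][s2] = P(s2 | s, a).
T: Dict[str, List[List[float]]] = {
    "stay": [[1.0, 0.0, 0.0],
             [0.0, 1.0, 0.0],
             [0.0, 0.0, 1.0]],
    "forward": [[0.2, 0.8, 0.0],
                [0.0, 0.2, 0.8],
                [0.0, 0.0, 1.0]],
}

# Assumed observation model: O[s2][o] = P(o | s2), with o = 0 ("wall") or 1 ("opening").
# Nodes 0 and 2 share the same distribution, which models perceptual ambiguity.
O: List[List[float]] = [[0.9, 0.1],
                        [0.2, 0.8],
                        [0.9, 0.1]]

# Assumed reward for arriving at each node, and assumed cost of each action.
NODE_REWARD = [0.0, 2.0, 10.0]
ACTION_COST = {"stay": 0.0, "forward": 0.5}


def update_belief(b: List[float], a: str, o: int) -> List[float]:
    """Bayes filter: b'(s2) is proportional to P(o | s2) * sum_s P(s2 | s, a) * b(s)."""
    predicted = [sum(T[a][s][s2] * b[s] for s in STATES) for s2 in STATES]
    unnormalized = [O[s2][o] * predicted[s2] for s2 in STATES]
    z = sum(unnormalized)
    if z == 0.0:
        raise ValueError("observation has zero probability under the model")
    return [p / z for p in unnormalized]


def expected_reward(b: List[float], a: str) -> float:
    """Expected reward of the node reached after action a, minus the action's cost."""
    gain = sum(b[s] * T[a][s][s2] * NODE_REWARD[s2] for s in STATES for s2 in STATES)
    return gain - ACTION_COST[a]


def greedy_action(b: List[float]) -> str:
    """Greedy policy: choose the action with the highest one-step expected reward."""
    return max(ACTIONS, key=lambda a: expected_reward(b, a))


if __name__ == "__main__":
    belief = [1.0, 0.0, 0.0]          # robot starts certain it is in the corridor
    action = greedy_action(belief)
    belief = update_belief(belief, action, o=1)  # it then observes an opening
    print(action, [round(p, 3) for p in belief])
```

The greedy rule looks only one step ahead, which is what keeps it cheap compared with solving the full POMDP; the trade-off is that it can be myopic when rewards lie several transitions away.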
