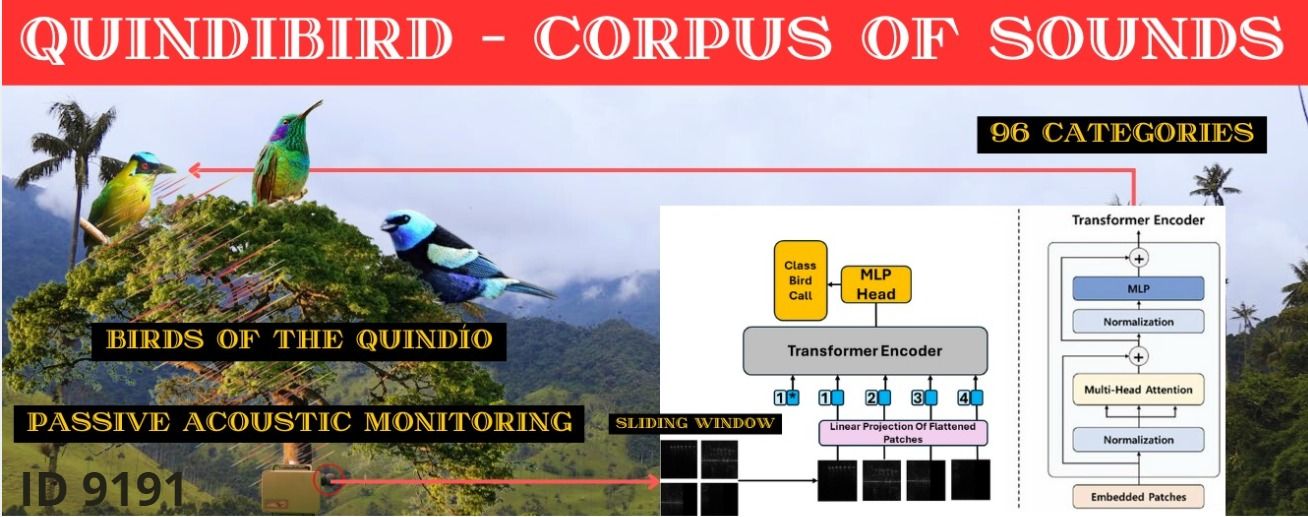

A corpus of bird sounds from Quindío and its application for passive acoustic monitoring through neural networks

Keywords:

Passive acoustic monitoring, Bird sounds, Sound corpus, Avifauna, Feature extraction

Abstract

The biodiversity of a region is an invaluable asset that requires continuous effort to preserve and study. The Quindío region of Colombia stands out for its rich native birdlife, which enriches its natural landscape and contributes significantly to the country's biological diversity. Observing and studying these species is therefore essential for the conservation and knowledge of the region. In this context, this paper proposes a corpus of sounds supported by machine learning (ML) processing. The datasets used include audio recordings of 170 bird species, approximately 30% of the bird species identified in Quindío, as well as recordings of human voices, silence, and noise. For signal processing, a sliding-window feature extraction technique is used to analyze and classify bird sounds. Three neural networks were trained to evaluate the corpus. The first was a convolutional network. Based on its results, two additional networks were trained, one convolutional and one based on the Transformer architecture, using only the categories that reached an F1-score of 0.30 or higher in the first convolutional network. The three networks achieved precision of 0.55, 0.53, and 0.65, respectively. The Transformer-based network performed best at classifying the sounds of native birds of Quindío. A proof of concept was carried out on this network with audio recordings of the Saffron Finch (Sicalis flaveola), reaching an accuracy of 65.74%. These results offer a baseline for future research on bird sound classification, thus promoting the conservation of regional avifauna.
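The two procedural steps named in the abstract, sliding-window segmentation and the selection of categories by F1-score, can be illustrated with a minimal sketch. It is not the authors' implementation: the window length, hop length, function names, and toy labels are assumptions made for illustration, and only the 0.30 threshold comes from the abstract.

```python
import numpy as np


def sliding_windows(signal, sample_rate, window_s=3.0, hop_s=1.5):
    """Split a 1-D waveform into fixed-length, overlapping windows.

    window_s and hop_s are illustrative values, not taken from the paper.
    """
    win = int(round(window_s * sample_rate))
    hop = int(round(hop_s * sample_rate))
    if win <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    signal = np.asarray(signal)
    if len(signal) < win:
        # Zero-pad short recordings so that they yield exactly one window.
        signal = np.pad(signal, (0, win - len(signal)))
    n_windows = 1 + (len(signal) - win) // hop
    return np.stack([signal[i * hop: i * hop + win] for i in range(n_windows)])


def per_class_f1(y_true, y_pred, classes):
    """Compute the F1-score of each class from true and predicted labels."""
    scores = {}
    for c in classes:
        tp = sum(t == c and p == c for t, p in zip(y_true, y_pred))
        fp = sum(t != c and p == c for t, p in zip(y_true, y_pred))
        fn = sum(t == c and p != c for t, p in zip(y_true, y_pred))
        denom = 2 * tp + fp + fn
        scores[c] = 2 * tp / denom if denom else 0.0
    return scores


def select_classes(scores, threshold=0.30):
    """Keep the classes whose F1-score is at least the threshold."""
    return [c for c, f1 in scores.items() if f1 >= threshold]


# Toy usage: a 10-second ramp "signal" sampled at 100 Hz (hypothetical data).
windows = sliding_windows(np.arange(1000), sample_rate=100)

# Toy labels for three hypothetical categories.
y_true = ["a", "a", "b", "b", "c"]
y_pred = ["a", "a", "b", "c", "b"]
scores = per_class_f1(y_true, y_pred, ["a", "b", "c"])
kept = select_classes(scores)
```

In a pipeline like the one described, each window would be converted to features (for example a spectrogram) before classification, and the retained categories would define the label set for the second round of training.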
