Lightweight Real-Time Object Detection via Enhanced Global Perception and Intra-Layer Interaction for Complex Traffic Scenarios

Keywords:

object detection, complex autonomous driving, real-time, global dependencies, multi-branch scale-aware

Abstract

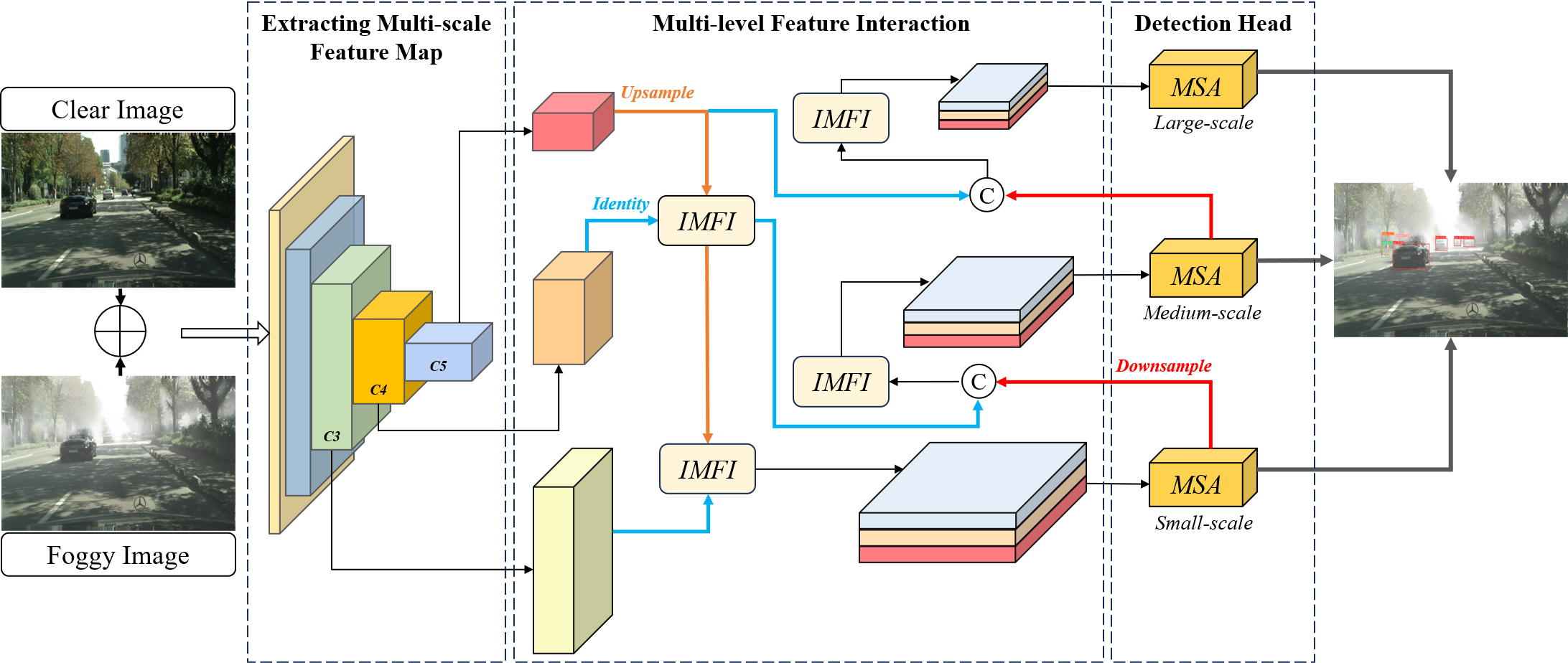

Detection accuracy in complex traffic scenarios remains low because targets of many types are cluttered in space and time, background objects of varying shapes cause occlusion, and inclement weather blurs feature information. To address these issues, this paper proposes a lightweight real-time detection network that strengthens multi-scale object perception in traffic scenarios while preserving real-time detection speed. First, we construct a novel global feature extraction (GFE) structure that cascades orthogonal band convolution kernels to capture the global dependencies between pixels and improve feature discrimination. Then, we propose an intra-layer multi-scale feature interaction (IMFI) module to reinforce the effective reuse and multi-level transfer of salient features. In addition, we build a multi-branch scale-aware aggregation (MSA) module that captures abundant context-associated features, improving the model's target decision-making capability and its adaptability to diverse object scales. Experimental results show that the proposed approach improves AP50 by 5.6 percentage points over the baseline model while using fewer parameters and less computation, with an improved speed of 73 FPS. Furthermore, our approach achieves the best speed-accuracy balance among the compared object detection algorithms of comparable magnitude.
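The idea of cascading orthogonal band kernels can be illustrated with a small, dependency-free sketch. It is not the paper's GFE structure: the kernel length `k`, the all-ones weights, and the helper names `conv2d_same`, `strip_cascade`, and `impulse_footprint` are assumptions chosen only for illustration. The sketch shows that a 1 x k kernel followed by a k x 1 kernel lets one input pixel influence a k x k neighborhood while using 2k weights instead of k².

```python
# Illustrative sketch only: cascaded orthogonal strip ("band") convolutions.
# Kernel length k and the all-ones weights are assumptions, not the paper's settings.

def conv2d_same(image, kernel):
    """Zero-padded, same-size 2-D cross-correlation on nested lists."""
    h, w = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    ph, pw = kh // 2, kw // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    yy, xx = y + i - ph, x + j - pw
                    if 0 <= yy < h and 0 <= xx < w:  # outside pixels count as zero
                        acc += image[yy][xx] * kernel[i][j]
            out[y][x] = acc
    return out


def strip_cascade(image, k):
    """Apply a 1 x k kernel, then a k x 1 kernel (k assumed odd)."""
    row_kernel = [[1.0] * k]                 # horizontal band
    col_kernel = [[1.0] for _ in range(k)]   # vertical band
    return conv2d_same(conv2d_same(image, row_kernel), col_kernel)


def impulse_footprint(k):
    """Count output pixels affected by a single nonzero input pixel."""
    size = 2 * k + 1
    image = [[0.0] * size for _ in range(size)]
    image[k][k] = 1.0
    response = strip_cascade(image, k)
    return sum(1 for row in response for value in row if value != 0.0)


k = 7
print(impulse_footprint(k))  # 49: one pixel reaches a 7 x 7 neighborhood
print(2 * k, k * k)          # 14 49: weights in the cascade vs. a dense k x k kernel
```

With longer bands the footprint grows quadratically while the weight count grows linearly, which is one plausible reason such cascades suit a lightweight network that needs wide spatial context.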
