Word Level Sign Language Recognition via Handcrafted Features

Keywords:

Sign Language Recognition, Word Sign Language Recognition, Computer Vision, Pattern Recognition

Abstract

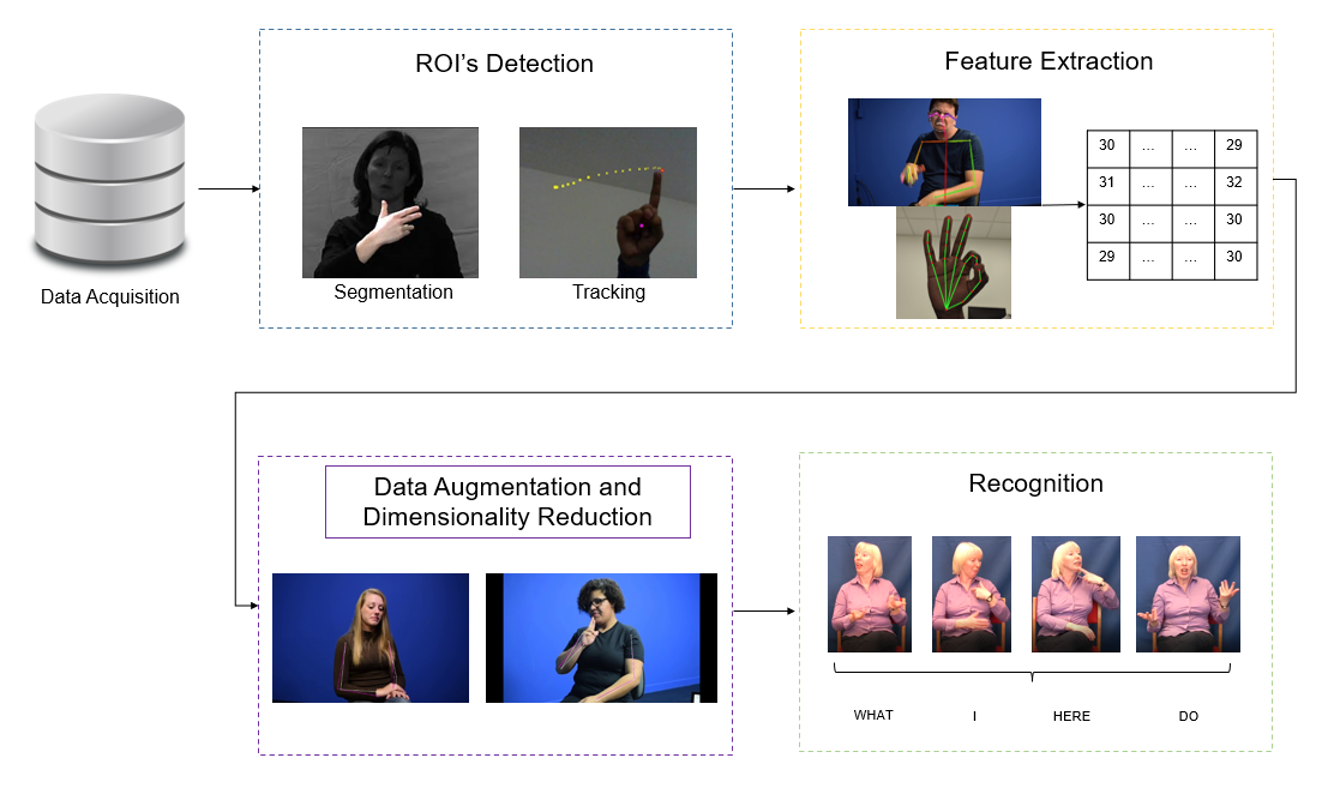

The ability to be understood and to convey feelings, requests, or ideas through spoken or written words is one of the abilities most undervalued by those who have the privilege of using it. The deaf community faces this challenge every day. Although sign languages exist to address it, not everyone in the deaf community knows how to use them, and an even smaller proportion of the hearing community knows how to interpret them. For this reason, sign language recognition has become relevant as an effort to address this problem and open new communication channels. This work proposes a methodology for word-level sign language recognition. Its main highlight is a small set of handcrafted features, among which non-manual features are explored in depth. Data augmentation and dimensionality reduction were applied to obtain a concise feature space. Two recognition models, a bidirectional long short-term memory (BiLSTM) network and a Transformer, were evaluated on the LIBRAS dataset; the best result, an accuracy of 94.33%, was obtained with the BiLSTM network.
