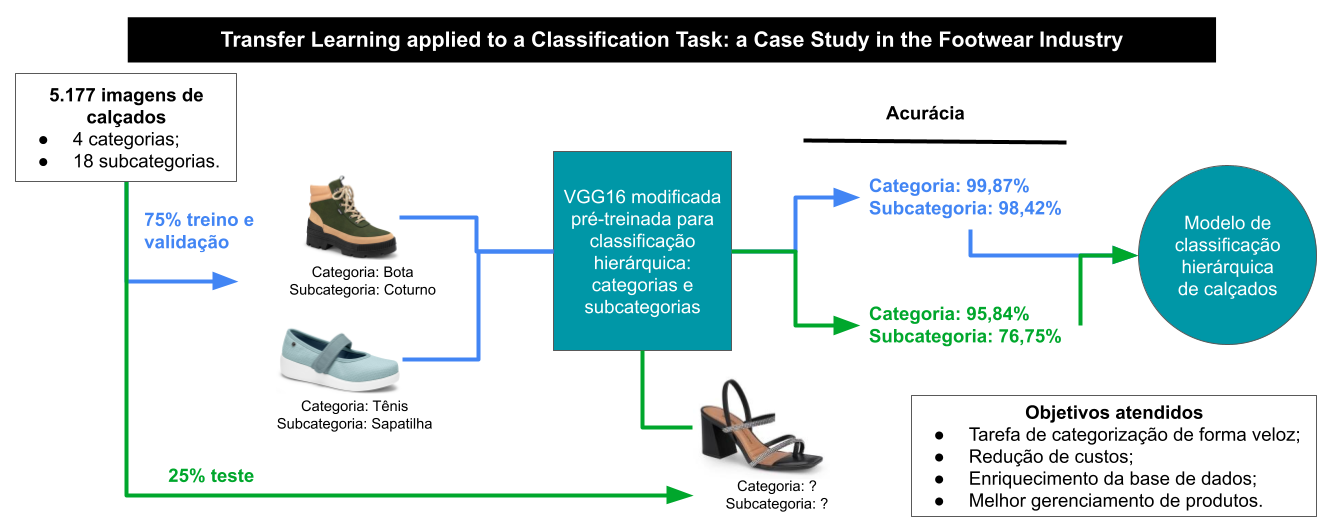

Transfer Learning applied to a Classification Task: a Case Study in the Footwear Industry

Keywords:

Convolutional Neural Networks, Image Classification, Transfer Learning, VGG16.

Abstract

Convolutional Neural Networks (CNNs) are a widely used method for image classification. They belong to the field of Deep Learning, whose main advantage is that features are learned directly from the images rather than being extracted manually by a human. In the footwear industry, CNNs are a useful computational resource, applied to problems such as style classification and machine vision. This article evaluates the performance of transfer learning methods for the hierarchical classification of new footwear products. For this purpose, a dataset of 5,177 images of women's shoes was built, and a pretrained architecture was selected and fine-tuned to produce a classification model. As the main result of this study, we confirm that transfer learning speeds up the training of deep neural networks and, with a VGG16 architecture, yields outstanding results: accuracies of 99.97% for classifying footwear categories and 98.42% for classifying subcategories.
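The abstract does not detail how the pretrained network was refined. As a rough illustration of the general technique only, the sketch below shows a common two-phase transfer-learning recipe with VGG16, assuming TensorFlow's Keras API: first train a new classification head on top of a frozen ImageNet-pretrained convolutional base, then unfreeze the last convolutional block and fine-tune with a small learning rate. The helper names (`build_vgg16_classifier`, `unfreeze_last_block`), the head size, the dropout rate, and the learning rates are hypothetical choices, not the authors' reported configuration.

```python
from tensorflow import keras


def build_vgg16_classifier(num_classes, input_shape=(224, 224, 3), weights="imagenet"):
    """Hypothetical helper: frozen VGG16 convolutional base plus a new softmax head."""
    # Pretrained convolutional base, without VGG16's original 1000-class classifier.
    base = keras.applications.VGG16(
        include_top=False, weights=weights, input_shape=input_shape
    )
    base.trainable = False  # phase 1: only the new head is trained

    inputs = keras.Input(shape=input_shape)
    x = base(inputs, training=False)
    x = keras.layers.GlobalAveragePooling2D()(x)
    x = keras.layers.Dense(256, activation="relu")(x)  # assumed head size
    x = keras.layers.Dropout(0.5)(x)                    # assumed dropout rate
    outputs = keras.layers.Dense(num_classes, activation="softmax")(x)

    model = keras.Model(inputs, outputs)
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=1e-3),  # assumed LR
        loss="sparse_categorical_crossentropy",  # integer class labels
        metrics=["accuracy"],
    )
    return model, base


def unfreeze_last_block(model, base, learning_rate=1e-5):
    """Hypothetical phase 2: unfreeze VGG16's block5 and recompile with a small LR."""
    base.trainable = True
    for layer in base.layers:
        # Keep every layer frozen except those in the last convolutional block.
        layer.trainable = layer.name.startswith("block5")
    # Recompiling is required for the trainability change to affect training.
    model.compile(
        optimizer=keras.optimizers.Adam(learning_rate=learning_rate),
        loss="sparse_categorical_crossentropy",
        metrics=["accuracy"],
    )
```

In use, images would first be passed through `keras.applications.vgg16.preprocess_input`, and `model.fit(...)` would be called once after building the model and again after `unfreeze_last_block`. Freezing the base first keeps large gradients from the randomly initialized head from disturbing the pretrained filters; only then is the last block adapted to the target images. For a category/subcategory hierarchy, the same builder could be called with the number of classes at each level, though how the authors actually structured the hierarchy is not stated here.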

