Modified YOLO Module for Efficient Object Tracking in a Video

Keywords:

Object detection, YOLO, Motion Tracking, RANSAC

Abstract

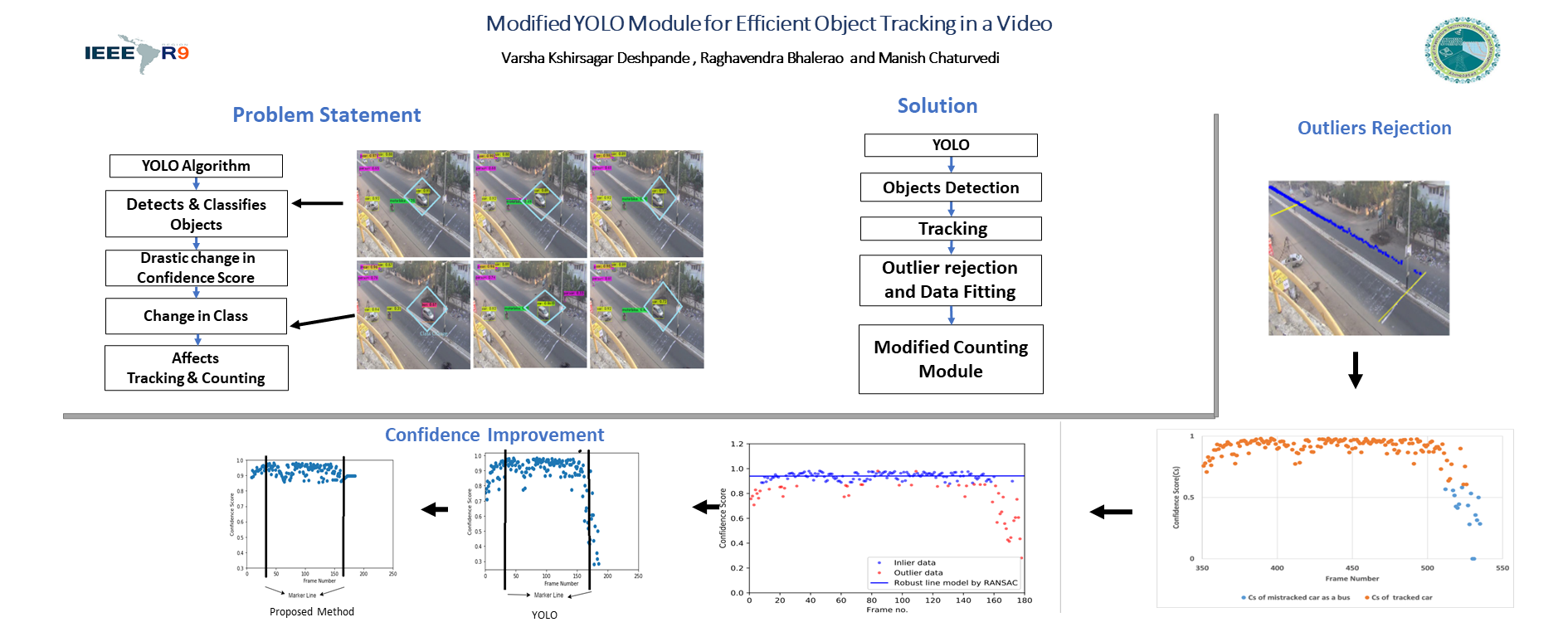

In the proposed work, the YOLO algorithm is first used to detect and classify the objects in each frame. Across a sequence of frames, however, the confidence score of a detection can drop suddenly for various reasons. Such a drop can change the predicted class of an object between consecutive frames, which severely affects the object tracking and counting process. To overcome this limitation, the YOLO algorithm is modified so that it tracks the same object consistently across the sequence of frames, which in turn increases object tracking and counting accuracy. In the proposed work, drastic changes in the confidence score and changes of class between consecutive frames are identified by tracking the confidence of each object across the sequence of frames. These outliers are detected and removed using the RANSAC algorithm. After the outliers are removed, interpolation is applied to estimate a new confidence score at those frames. The proposed method yields a smooth variation of the confidence measure across frames. As a result, average counting accuracy increases from 66% to 87%, and overall average object classification accuracy lies in the range of 94% to 96% on various standard datasets.
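The outlier-removal step described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the abstract does not specify the RANSAC model, threshold, or interpolation scheme, so this sketch assumes that one tracked object's confidence varies roughly linearly with frame index over a short window, uses a residual threshold of 0.1, fills removed frames by linear interpolation between the nearest inlier frames, and relabels the track with the majority class among inlier frames. The function names (`ransac_inliers`, `interpolate_outliers`, `smooth_track`) and the example data are hypothetical.

```python
import random
from collections import Counter


def fit_line(p, q):
    """Return (slope, intercept) of the line through points p and q."""
    (x1, y1), (x2, y2) = p, q
    slope = (y2 - y1) / (x2 - x1)
    return slope, y1 - slope * x1


def ransac_inliers(frames, conf, threshold=0.1, iterations=200, seed=0):
    """Fit conf ~ slope * frame + intercept with RANSAC; return an inlier mask.

    Assumption (not from the paper): confidence is roughly linear in frame index.
    """
    rng = random.Random(seed)
    points = list(zip(frames, conf))
    best_mask, best_count = [True] * len(points), -1
    for _ in range(iterations):
        p, q = rng.sample(points, 2)  # minimal sample for a line
        if p[0] == q[0]:
            continue  # degenerate sample, cannot define a line
        slope, intercept = fit_line(p, q)
        mask = [abs(y - (slope * x + intercept)) <= threshold for x, y in points]
        if sum(mask) > best_count:  # keep the model with the most inliers
            best_count, best_mask = sum(mask), mask
    return best_mask


def interpolate_outliers(frames, conf, mask):
    """Replace outlier confidences by linear interpolation between inliers."""
    inliers = [i for i, ok in enumerate(mask) if ok]
    smoothed = list(conf)
    for i, ok in enumerate(mask):
        if ok:
            continue
        left = max((j for j in inliers if j < i), default=None)
        right = min((j for j in inliers if j > i), default=None)
        if left is None:  # outlier at the start: copy the next inlier
            smoothed[i] = conf[right]
        elif right is None:  # outlier at the end: copy the previous inlier
            smoothed[i] = conf[left]
        else:
            t = (frames[i] - frames[left]) / (frames[right] - frames[left])
            smoothed[i] = conf[left] + t * (conf[right] - conf[left])
    return smoothed


def smooth_track(frames, conf, labels, threshold=0.1):
    """Hypothetical per-track smoothing: RANSAC, interpolation, majority label."""
    mask = ransac_inliers(frames, conf, threshold)
    # Assumption: the track's class is the majority class over inlier frames.
    majority = Counter(l for l, ok in zip(labels, mask) if ok).most_common(1)[0][0]
    return interpolate_outliers(frames, conf, mask), [majority] * len(labels), mask


# Hypothetical track: two sudden confidence drops that also flip the class.
frames = list(range(10))
conf = [0.90, 0.91, 0.90, 0.35, 0.92, 0.91, 0.90, 0.40, 0.91, 0.92]
labels = ["car"] * 3 + ["truck"] + ["car"] * 3 + ["truck"] + ["car"] * 2
smoothed, classes, mask = smooth_track(frames, conf, labels)
print([round(c, 3) for c in smoothed], classes)
```

In this sketch the two low-confidence frames fall outside every line fitted through the high-confidence frames, so RANSAC marks them as outliers; their confidences are replaced by values interpolated from the neighbouring frames, and the spurious "truck" labels are overwritten with the track's majority class. A real tracker would apply this per object track, typically over a sliding window rather than the whole video.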
