High Speed Marker Tracking for Flight Tests

Keywords:

deep learning, convolutional neural networks, tracking, CNNs, image processing, flight test, corner detection

Abstract

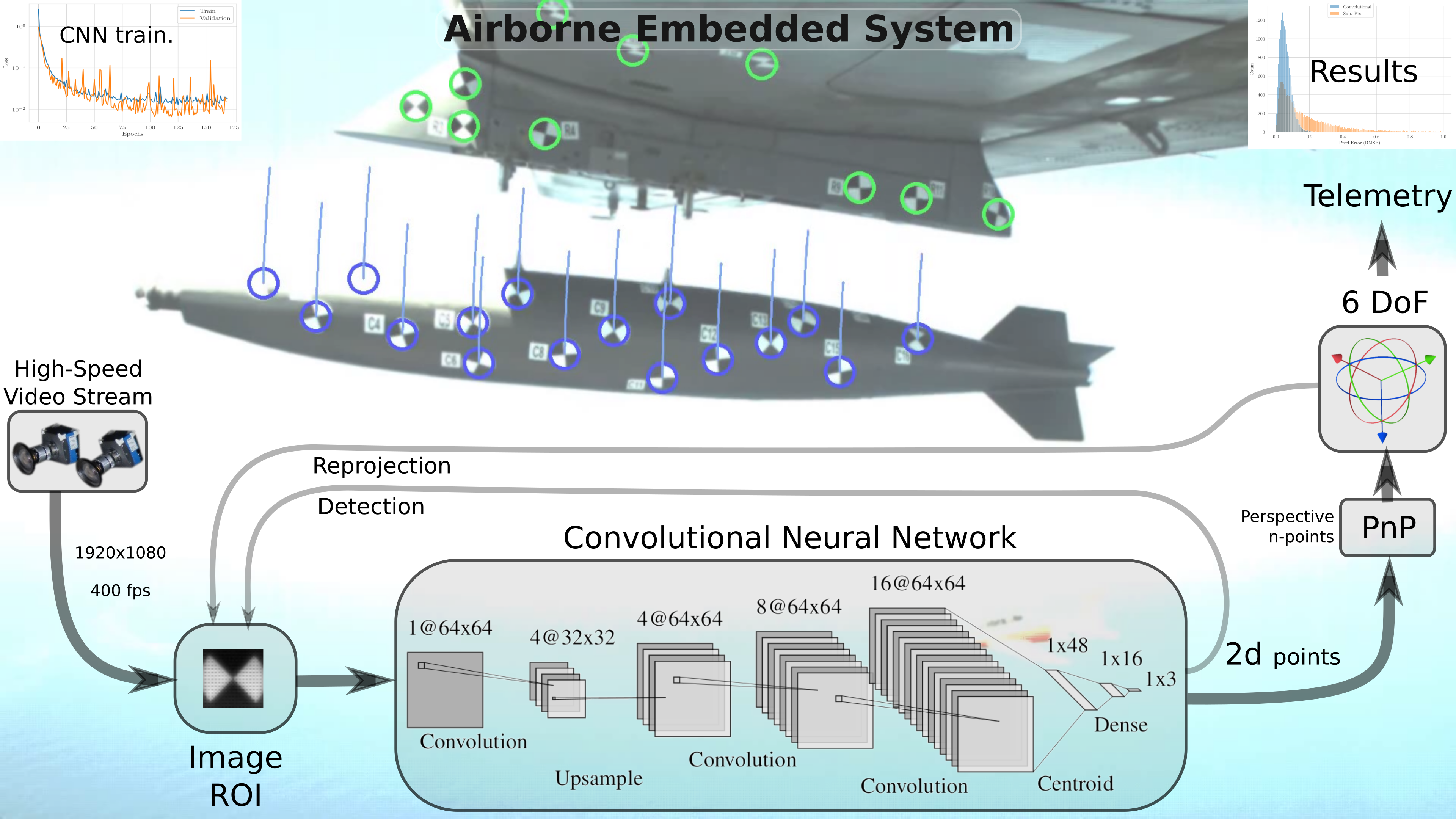

Flight testing is a mandatory process to ensure safety during normal operations and to evaluate an aircraft during its certification phase. Because a test flight can be a high-risk activity that may result in loss of the aircraft or even loss of life, simulation models and real-time monitoring systems are crucial to assess the risk and to increase situational awareness and safety. We propose HSMT4FT, a new CNN-based model for detecting and tracking fiducial markers. It is one of the main components of the Optical Trajectory System (SisTrO), which is responsible for detecting and tracking fiducial markers on external stores, on pylons, and on the wings of an aircraft during flight tests. HSMT4FT is a real-time processing model used to measure the trajectory in a store separation test and also to assess vibrations and wing deflections. Although several libraries provide rule-based approaches for detecting predefined markers, this work contributes by developing and evaluating three convolutional neural network (CNN) models for detecting and localizing fiducial markers. We also compared classical corner detection methods implemented in the OpenCV library with the neural network model executed in the OpenVINO environment, evaluating both execution time and precision/accuracy. One of the CNN models achieved the highest throughput, the lowest RMSE, and the highest F1 score among the tested and benchmark models. The best model is fast enough to enable real-time applications in embedded systems and will be used for detection and tracking in real flight tests in the future.
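The abstract reports RMSE and F1 score but does not state how they were computed. As a rough illustration only, the sketch below shows one common way to score corner localization: predicted corners are greedily matched to ground-truth corners within a pixel tolerance, F1 is derived from the matched and unmatched counts, and RMSE is taken over the matched pairs. The function name `evaluate_corners`, the tolerance `tol`, and the sample coordinates are all hypothetical and are not taken from the paper.

```python
import math


def evaluate_corners(predicted, ground_truth, tol=3.0):
    """Score predicted corner locations against ground truth.

    Assumption (not from the paper): a prediction counts as a true positive
    if it lies within `tol` pixels of a not-yet-matched ground-truth corner.
    Returns (rmse, f1); rmse is NaN when nothing is matched.
    """
    unmatched = list(ground_truth)
    squared_errors = []
    for px, py in predicted:
        if not unmatched:
            break
        # Nearest ground-truth corner that has not been matched yet.
        best = min(unmatched, key=lambda g: math.hypot(px - g[0], py - g[1]))
        dist = math.hypot(px - best[0], py - best[1])
        if dist <= tol:
            squared_errors.append(dist ** 2)
            unmatched.remove(best)

    tp = len(squared_errors)        # matched predictions
    fp = len(predicted) - tp        # predictions with no nearby corner
    fn = len(ground_truth) - tp     # corners that were missed
    rmse = math.sqrt(sum(squared_errors) / tp) if tp else float("nan")
    f1 = 2 * tp / (2 * tp + fp + fn) if (tp + fp + fn) else 0.0
    return rmse, f1


# Hypothetical marker with four corners and one spurious detection.
ground_truth = [(10.0, 10.0), (50.0, 10.0), (50.0, 50.0), (10.0, 50.0)]
predicted = [(10.0, 11.0), (50.0, 10.0), (49.0, 50.0), (200.0, 200.0)]
rmse, f1 = evaluate_corners(predicted, ground_truth)
print(f"RMSE = {rmse:.3f} px, F1 = {f1:.2f}")
```

Greedy nearest-neighbour matching keeps the sketch short; an optimal assignment (for example the Hungarian method) would be a reasonable alternative when markers are densely packed.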

