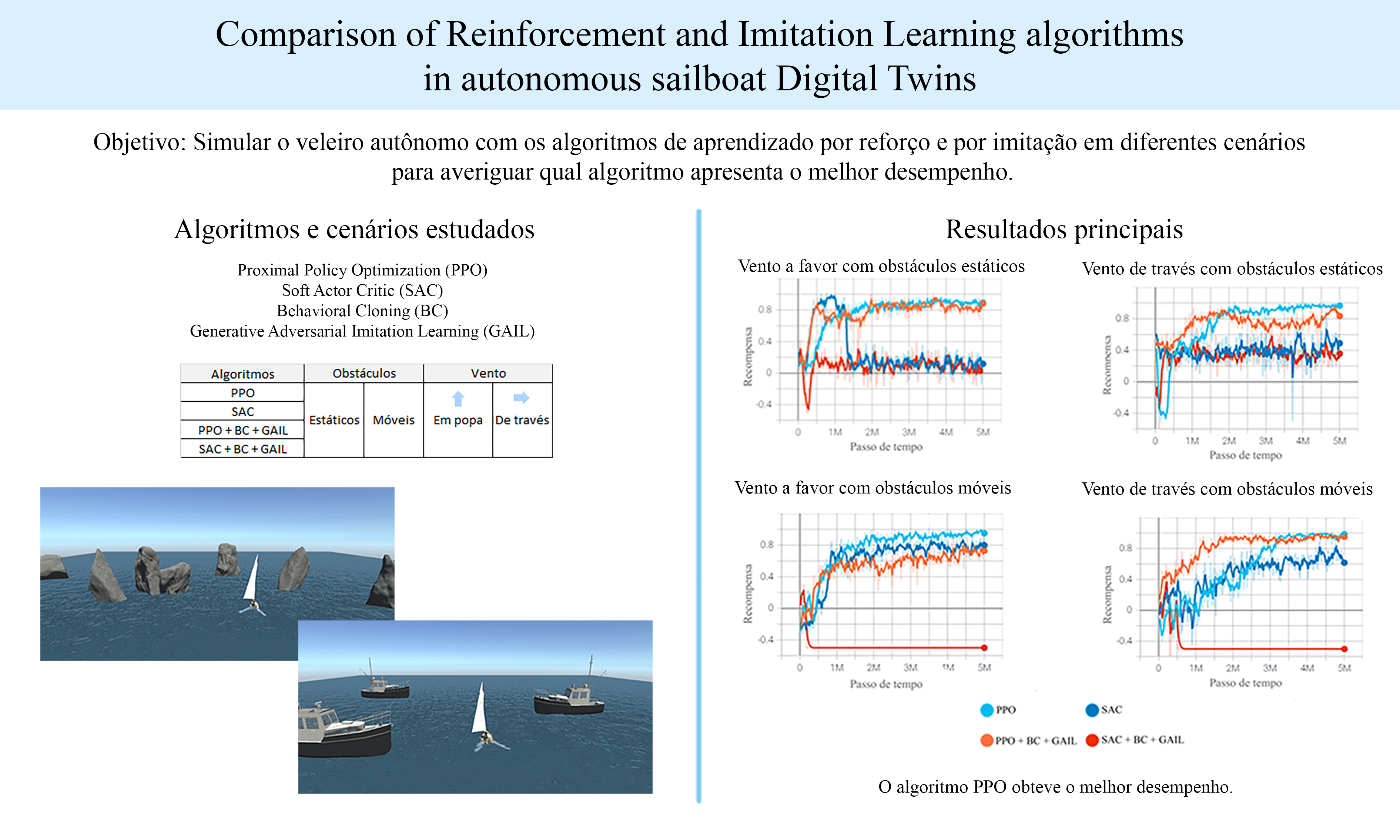

Comparison of Reinforcement and Imitation Learning algorithms in autonomous sailboat Digital Twins

Keywords:

reinforcement learning, imitation learning, autonomous sailboat, unmanned surface vehicle

Abstract

This project studies the performance of two reinforcement learning algorithms, Proximal Policy Optimization (PPO) and Soft Actor-Critic (SAC), in the simulation of autonomous sailboats: how the agents respond to different wind directions while following defined target checkpoints and avoiding obstacles detected by image analysis. It also studies the effect of combining these algorithms with two imitation learning algorithms, Behavioral Cloning and Generative Adversarial Imitation Learning. The proposed scenarios consist of areas filled with random static or moving obstacles, in the presence of favorable winds or crosswinds. The project is motivated by the lack of studies of these algorithms in autonomous sailboats, a gap the current study tries to address. The Unity® platform and the ML-Agents machine learning toolkit are used for development, and the methodology that guides the project can be applied similarly to other reinforcement learning problems. Comparing the results of agent training shows that Proximal Policy Optimization performs better in the proposed scenarios, both with and without the support of imitation learning algorithms.
