Using Artificial Vision for Measuring the Range of Motion

Keywords:

augmented reality, telerehabilitation, pose estimation, goniometer, artificial vision, range of motion

Abstract

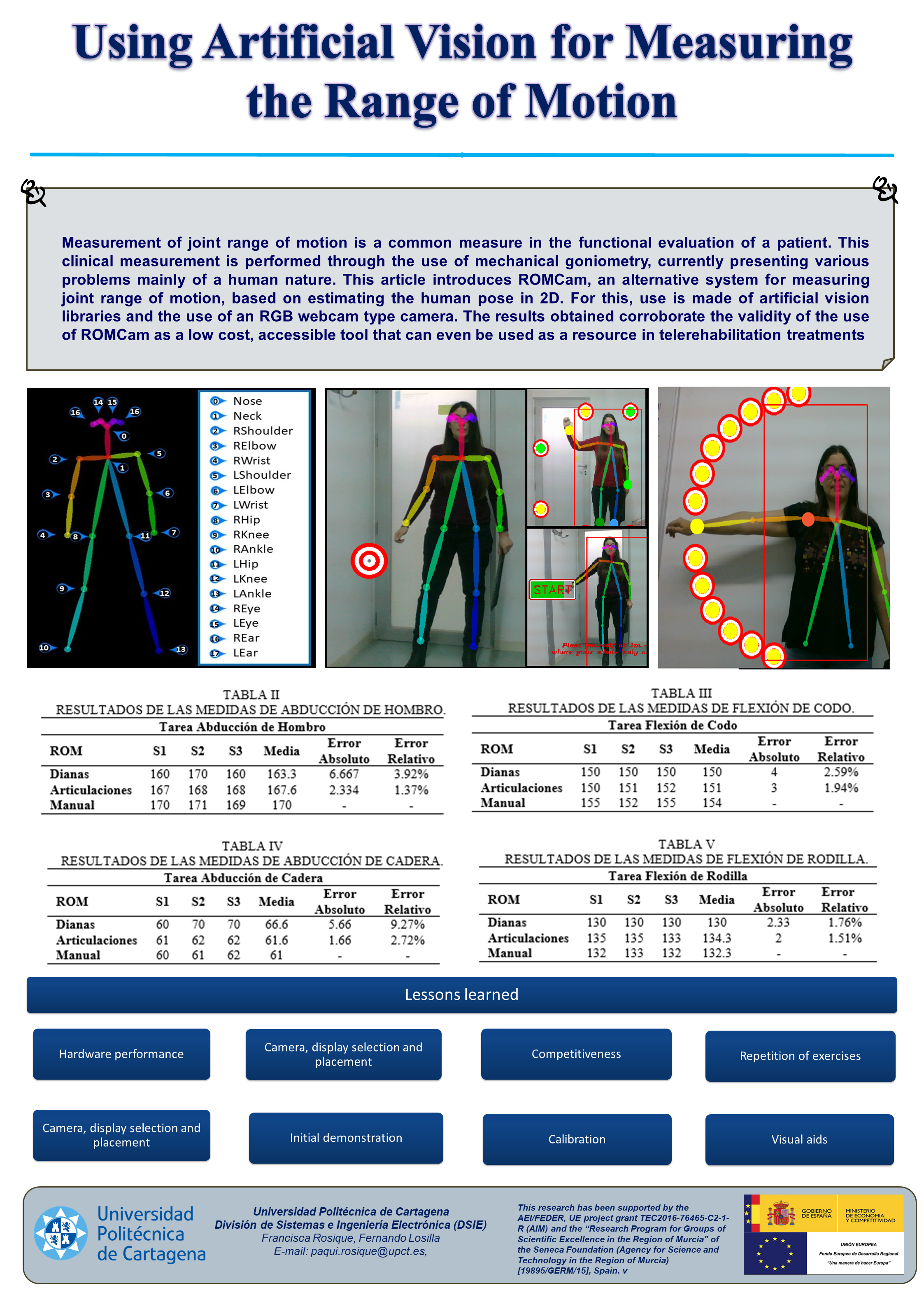

Joint range of motion is routinely measured during the functional evaluation of a patient. In clinical practice this measurement is taken with a mechanical goniometer, a method that currently presents several problems, most of them human in origin. This article introduces ROMCam, an alternative system for measuring joint range of motion based on 2D human pose estimation. The system relies on artificial vision libraries and an RGB webcam. The results obtained support the validity of ROMCam as a low-cost, accessible tool that can also serve as a resource in telerehabilitation treatments.
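
As an illustration of how a joint angle can be derived from 2D pose estimation, the following minimal sketch computes the angle at a joint from three keypoints in image coordinates. It is not the ROMCam implementation: the function name `joint_angle_deg` and the sample coordinates are hypothetical, and the keypoints are assumed to come from any 2D pose estimator that returns (x, y) pixel positions.

```python
import math


def joint_angle_deg(proximal, joint, distal):
    """Return the angle at `joint`, in degrees (0 to 180), between the
    segment joint->proximal and the segment joint->distal.

    Each argument is an (x, y) pair in image coordinates (assumed input
    format; a real pose estimator may also return a confidence score).
    """
    v1 = (proximal[0] - joint[0], proximal[1] - joint[1])
    v2 = (distal[0] - joint[0], distal[1] - joint[1])
    if math.hypot(*v1) == 0 or math.hypot(*v2) == 0:
        raise ValueError("a segment has zero length; keypoints coincide")
    # atan2 of |cross| and dot is numerically stable near 0 and 180 degrees.
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return math.degrees(math.atan2(abs(cross), dot))


# Hypothetical shoulder, elbow and wrist keypoints (pixels).
shoulder, elbow, wrist = (100, 100), (200, 100), (200, 200)
elbow_angle = joint_angle_deg(shoulder, elbow, wrist)
```

A fully extended limb gives an angle close to 180 degrees under this convention, so a clinical flexion value would typically be reported as 180 minus the computed angle. Because the estimate is 2D, it is only meaningful when the plane of movement is roughly parallel to the camera's image plane.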
