MIHR: A Human-Robot Interaction Model

Keywords:

ROBOT, social robot, Human-Robot Interaction, Social Robotics.

Abstract

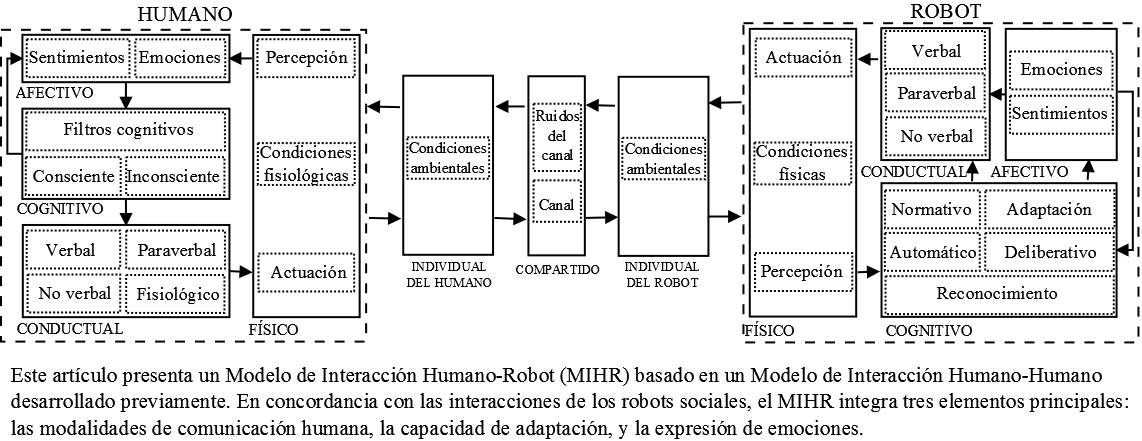

Interactions between people and social robots have had positive effects on people of different ages in diverse contexts. A model of the interaction process is important because it helps a social robot understand the person it is interacting with and manage the internal dynamics of the interaction. Models describing the interaction between humans and machines exist, but they do not integrate the three elements that matter most for social robots during an interaction: the modalities of human communication, the capacity for adaptation, and the expression of emotions. This paper reviews models of interaction between people and social robots to analyze how they have addressed these three elements. It then proposes a Human-Robot Interaction Model (MIHR), based on a previously developed Human-Human Interaction Model (MIHH), that integrates the main elements social robots must consider during interactions.

References

T. Ribeiro, A. Pereira, E. Di Tullio, and A. Paiva, “The SERA ecosystem: Socially expressive robotics architecture for autonomous human-robot interaction”, In Proc. Symposium on Enabling Computing Research in Socially Intelligent Human-Robot Interaction, pp. 155-163, 2016.

H. Admoni and B. Scassellati, “Social Eye Gaze in Human-Robot Interaction: A Review”, Journal of Human-Robot Interaction, vol. 6, no. 1, pp. 25-63, 2017.

P. Lasota, T. Fong, and J. Shah, “A Survey of Methods for Safe Human-Robot Interaction”, Foundations and Trends in Robotics, vol. 5, no. 4, pp. 261-349, 2017.

R. Siregar, R. Syahputra, and M. Mustar, “Human-Robot Interaction Based GUI”, Journal of Electrical Technology UMY, vol. 1, no. 1, pp. 10-19, 2017.

G. Briggs and M. Scheutz, “The Pragmatic Social Robot: Toward Socially-Sensitive Utterance Generation in Human-Robot Interactions”, In Proc. AAAI Fall Symposium Series: Artificial Intelligence for Human-Robot Interaction, pp. 12-15, 2016.

A. Ghazali, J. Ham, E. Barakova, and P. Markopoulos, “Effects of robot facial characteristics and gender in persuasive human-robot interaction”, Frontiers in Robotics and AI, vol. 5, no. 73, pp. 1-16, 2018.

F. Correia, S. Mascarenhas, R. Prada, F. Melo, and A. Paiva, “Group-based emotions in teams of humans and robots”, In Proc. International Conference on Human-Robot Interaction, pp. 261-269, 2018.

K. Luna, E. Palacios, and A. Marin, “A Fuzzy Speed Controller for a Guide Robot Using an HRI Approach”, IEEE Latin America Transactions, vol. 16, no. 8, pp. 2102-2107, 2018.

R. Andreasson, B. Alenljung, E. Billing, and R. Lowe, “Affective Touch in Human–Robot Interaction: Conveying Emotion to the Nao Robot”, International Journal of Social Robotics, vol. 10, no. 4, pp. 473-491, 2018.

Z. Zafar, D. Salazar, S. Al-Darraji, D. Urukalo, K. Berns, and A. Rodić, “Human Robot Interaction Using Dynamic Hand Gestures”, In Proc. International Conference on Robotics, pp. 649-656, 2017.

M. de Graaf, “An ethical evaluation of human–robot relationships”, International Journal of Social Robotics, vol. 8, no. 4, pp. 589-598, 2016.

J. Pérez, J. Aguilar, and E. Dapena, “MIHH: Un Modelo de Interacción Humano-Humano” [MIHH: A Human-Human Interaction Model], Revista Venezolana de Computación, vol. 5, no. 1, pp. 10-19, 2018.

B. Scassellati et al., “Improving social skills in children with ASD using a long-term, in-home social robot”, Science Robotics, vol. 3, no. 21, pp. 1-9, 2018.

J. Pérez and J. Castro, “LRS1: un robot social de bajo costo para la asignatura ‘Programación 1’” [LRS1: A low-cost social robot for the course ‘Programming 1’], Revista Colombiana de Tecnologías de Avanzada, vol. 32, no. 2, pp. 68-77, 2018.

Y. Noguchi, H. Kamide, and F. Tanaka, “Effects on the Self-disclosure of Elderly People by Using a Robot Which Intermediates Remote Communication”, In Proc. 27th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), pp. 612-617, 2018.

B. Dumas, D. Lalanne, and S. Oviatt, “Multimodal interfaces: A survey of principles, models and frameworks”, In Human Machine Interaction, Berlin, pp. 3-26, 2009.

A. Vinciarelli et al., “Open challenges in modelling, analysis and synthesis of human behaviour in human–human and human–machine interactions”, Cognitive Computation, vol. 7, no. 4, pp. 397-413, 2015.

J. Hussain et al., “Model-based adaptive user interface based on context and user experience evaluation”, Journal on Multimodal User Interfaces, vol. 12, no. 1, pp. 1-16, 2018.

G. Martins, L. Santos, and J. Dias, “User-Adaptive Interaction in Social Robots: A Survey Focusing on Non-physical Interaction”, International Journal of Social Robotics, pp. 1-21, 2018.

T. Hellström and S. Bensch, “Understandable robots”, Paladyn, Journal of Behavioral Robotics, vol. 9, no. 1, pp. 110-123, 2018.

A. Gil, J. Aguilar, E. Puerto, and E. Dapena, “Emergence Analysis in a Multi-Robot System”, In Proc. XLIV Latin American Computer Conference (CLEI), pp. 662-669, 2018.

A. Gil, J. Aguilar, E. Dapena, and R. Rivas, “A Control Architecture for Robot Swarms (AMEB)”, Cybernetics and Systems, vol. 50, no. 3, pp. 300-322, 2019.

S. Song and S. Yamada, “Expressing emotions through color, sound, and vibration with an appearance-constrained social robot”, In Proc. International Conference on Human-Robot Interaction, pp. 2-11, 2017.

I. Brinck, C. Balkenius, and B. Johansson, “Making Place for Social Norms in the Design of Human-Robot Interaction. What Social Robots Can and Should Do”, In Proc. Robophilosophy, pp. 303-312, 2016.

R. Pérula et al., “Bioinspired decision-making for a socially interactive robot”, Cognitive Systems Research, pp. 1-40, 2018.

D. Herrera, F. Roberti, M. Toibero, and R. Carelli, “Human-Robot Interaction: Legible behavior rules in passing and crossing events”, IEEE Latin America Transactions, vol. 14, no. 6, pp. 2644-2650, 2016.

K. Tsiakas, M. Dagioglou, V. Karkaletsis, and F. Makedon, “Adaptive robot assisted therapy using interactive reinforcement learning”, In Proc. International Conference on Social Robotics, pp. 11-21, 2016.

S. Strohkorb et al., “Establishing Sustained, Supportive Human-Robot Relationships: Building Blocks and Open Challenges”, In Proc. Spring Symposium on Enabling Computing Research in Socially Intelligent Human-Robot Interaction, pp. 179-182, 2016.

A. Araujo, J. Pérez, and W. Rodriguez, “Aplicación de una Red Neuronal Convolucional para el Reconocimiento de Personas a Través de la Voz” [Application of a Convolutional Neural Network for Recognizing People Through Their Voice], In Proc. Sexta Conferencia Nacional de Computación, Informática y Sistemas, pp. 77-81, 2018.

G. Martins, P. Ferreira, L. Santos, and J. Dias, “A context-aware adaptability model for service robots”, In Proc. Workshop on Autonomous Mobile Service Robots, pp. 1-7, 2016.

N. Lubold, E. Walker, and H. Pon-Barry, “Effects of voice-adaptation and social dialogue on perceptions of a robotic learning companion”, In Proc. 11th International Conference on Human-Robot Interaction, pp. 255-262, 2016.

Q. Shen, K. Dautenhahn, J. Saunders, and H. Kose, “Can real-time, adaptive human-robot motor coordination improve humans’ overall perception of a robot?”, IEEE Transactions on Autonomous Mental Development, vol. 7, no. 1, pp. 52-64, 2015.

R. Ros, I. Baroni, and Y. Demiris, “Adaptive human-robot interaction in sensorimotor task instruction: from human to robot dance tutors”, Robotics and Autonomous Systems, vol. 62, no. 6, pp. 707-720, 2014.

R. Aylett, A. Kappas, G. Castellano, S. Bull, W. Barendregt, A. Paiva, and L. Hall, “I know how that feels—an empathic robot tutor”, In Proc. eChallenges e-2015 Conference, pp. 1-9, 2015.

A. Karami et al., “Adaptive artificial companions learning from users’ feedback”, Adaptive Behavior, vol. 24, no. 2, pp. 69-86, 2016.

S. Rukavina et al., “Affective computing and the impact of gender and age”, PLoS ONE, vol. 11, no. 3, pp. 1-20, 2016.

A. Gil, J. Aguilar, R. Rivas, and E. Dapena, “Behavioral Module in a Control Architecture for Multi-robots”, Revista Ingeniería al Día, vol. 2, no. 1, pp. 40-57, 2016.

N. Morán, J. Pérez, and W. Rodríguez, “Reconocimiento de Estados Emocionales de Personas Mediante la Voz Utilizando Algoritmos de Aprendizaje de Máquina” [Recognition of People’s Emotional States Through Voice Using Machine Learning Algorithms], Revista Venezolana de Computación, vol. 5, no. 2, pp. 41-52, 2018.

A. Bandhakavi, N. Wiratunga, S. Massie, and D. Padmanabhan, “Lexicon generation for emotion detection from text”, IEEE Intelligent Systems, vol. 32, no. 1, pp. 102-108, 2017.

V. Campos, B. Jou, and X. Giro, “From pixels to sentiment: Fine-tuning CNNs for visual sentiment prediction”, Image and Vision Computing, vol. 65, pp. 15-22, 2017.

J. Cordero, J. Aguilar, K. Aguilar, D. Chávez, and E. Puerto, “Recognition of the Driving Style in Vehicle Drivers”, Sensors, vol. 20, no. 9, 2020.

J. Aguilar, K. Aguilar, J. Cordero, D. Chávez, and E. Puerto, “Different Intelligent Approaches for Modeling the Style of Car Driving”, In Proc. International Conference on Informatics in Control, Automation and Robotics, pp. 284-291, 2017.

W. Lee and M. Norman, “Affective Computing as Complex Systems Science”, Procedia Computer Science, vol. 95, pp. 18-23, 2016.

T. Izui, I. Milleville, S. Sakka, and G. Venture, “Expressing emotions using gait of humanoid robot”, In Proc. International Symposium on Robot and Human Interactive Communication, pp. 241-245, 2015.

H. Samani, “The evaluation of affection in human-robot interaction”, Kybernetes, vol. 45, no. 8, pp. 1257-1272, 2016.

J. Pérez, J. Aguilar, E. Dapena, and G. Carrillo, “Q-learning based algorithm for learning children’s difficulties in multiplication tables”, submitted for publication, 2020.

S. Thellman et al., “Physical vs. Virtual Agent Embodiment and Effects on Social Interaction”, In D. Traum et al. (eds.), Intelligent Virtual Agents, Lecture Notes in Computer Science, Cham: Springer, 2016.

E. Dapena, J. Pérez, R. Rivas, and A. Guijarro, “Rostro Genérico para Máquinas que Interactúan con Personas” [A Generic Face for Machines that Interact with People], Revista Científica UNET, vol. 28, no. 2, pp. 121-130, 2016.

B. Kitchenham, S. Linkman, and D. Law, “DESMET: A Methodology for Evaluating Software Engineering Methods and Tools”, Computing & Control Engineering Journal, vol. 8, no. 3, pp. 120-126, 1997.