Comparative study of methods to obtain the number of hidden neurons of an auto-encoder in a high-dimensionality context

Keywords:

High-dimensionality data, Neural Network, Auto-encoder

Abstract

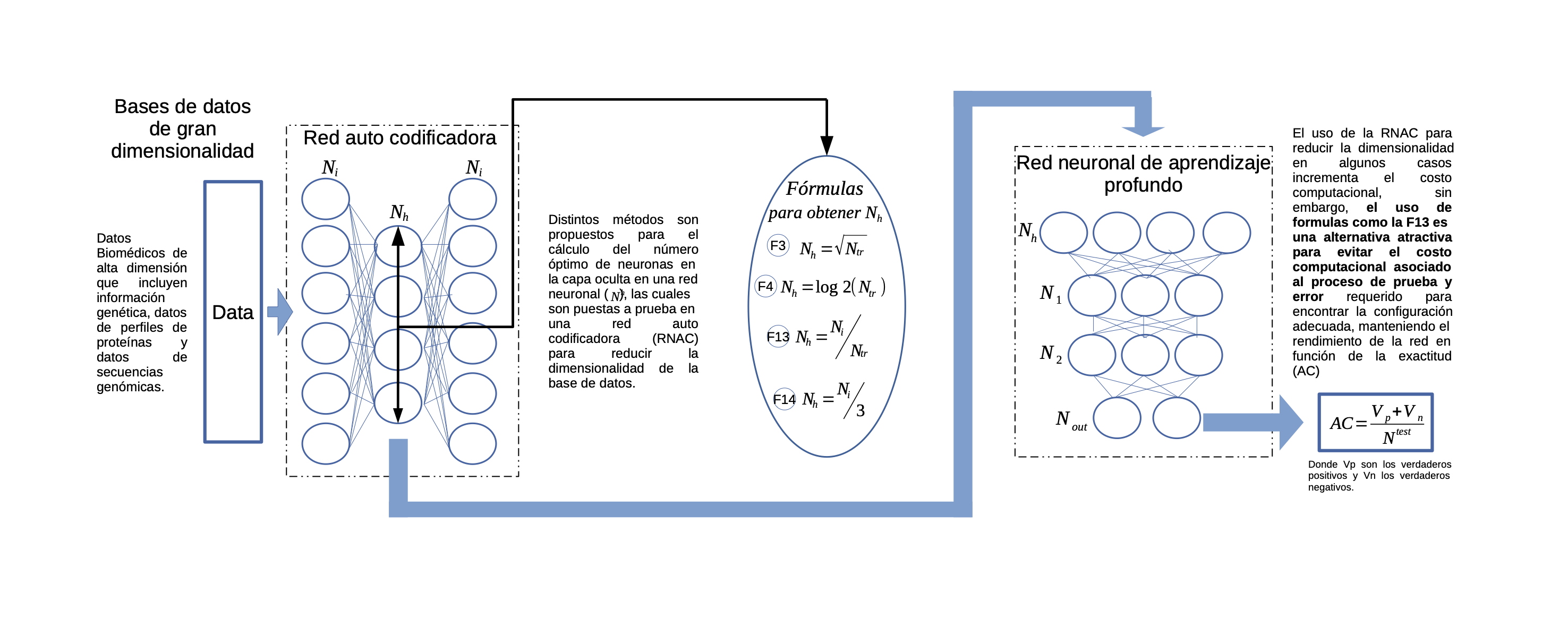

Fourteen formulas from the state of the art were used in this paper to find the optimal number of neurons in the hidden layer of an auto-encoder neural network. The auto-encoder is employed to reduce the dimensionality of the dataset in high-dimensionality scenarios without a significant reduction in classification accuracy compared with using the whole dataset. A deep learning neural network was employed to analyze the effectiveness of the studied formulas in terms of classification accuracy. Eight high-dimensional datasets were processed in an experimental setup to assess this proposal. The results presented in this work show that formula 13 (used to find the number of hidden neurons of the auto-encoder) is effective at reducing the data dimensionality without a statistically significant reduction in classification performance, as confirmed by the Friedman test and Holm's post-hoc test.
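As an illustration of the post-hoc step mentioned above, the following is a minimal sketch of Holm's step-down procedure in plain Python. The function name `holm_reject`, the significance level of 0.05, and the p-values in the usage lines are hypothetical and chosen only for illustration; they are not results from this paper, and the authors' actual statistical tooling may differ.

```python
def holm_reject(p_values, alpha=0.05):
    """Holm's step-down procedure (illustrative sketch).

    Returns a list of booleans, one per input p-value, that is True
    where the corresponding null hypothesis is rejected.
    """
    m = len(p_values)
    # Indices of the hypotheses, ordered from smallest to largest p-value.
    order = sorted(range(m), key=lambda i: p_values[i])
    reject = [False] * m
    for rank, idx in enumerate(order):
        # The i-th smallest p-value (0-based rank) is compared with alpha / (m - rank).
        if p_values[idx] <= alpha / (m - rank):
            reject[idx] = True
        else:
            # Once one hypothesis is retained, all remaining ones are retained too.
            break
    return reject


# Hypothetical p-values from comparing four methods against a control.
example_p_values = [0.01, 0.04, 0.03, 0.005]
print(holm_reject(example_p_values))
```

In this kind of analysis, each p-value would typically come from comparing one formula's results against a control (for example, the classifier trained on the whole dataset) after the Friedman test has indicated that differences exist; a comparison that is not rejected is read as showing no statistically significant difference.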
